Abstract

The last decade has seen the development of a wide set of tools, such as wavefront shaping, computational or fundamental methods, that allow us to understand and control light propagation in a complex medium, such as biological tissues or multimode fibers. A vibrant and diverse community is now working in this field, which has revolutionized the prospect of diffraction-limited imaging at depth in tissues. This roadmap highlights several key aspects of this fast developing field, and some of the challenges and opportunities ahead.


Original content from this work may be used under the terms of the Creative Commons Attribution 4.0 license. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

Introduction

Sylvain Gigan1 and Ori Katz2

1 Laboratoire Kastler Brossel, ENS-Université PSL, CNRS, Sorbonne Université, Collège de France, 24 rue Lhomond, 75005 Paris, France

2 Department of Applied Physics, Hebrew University of Jerusalem, Jerusalem, Israel

Wavefront shaping in complex media, the high-resolution manipulation of light waves in order to control light in disordered environments, is a relatively young field. Its commonly accepted inception can be traced back to 2007, when Vellekoop and Mosk demonstrated that, via an iterative optimization algorithm, a diffraction-limited focus could be obtained through a visually opaque, strongly scattering medium by phase control of thousands of optical modes [1]. Despite its youth, the field builds on decades of experimental work in several established fields, from adaptive optics (AO) for astronomy, through holography, phase conjugation, and time reversal of ultrasound waves, to RADAR imaging. It also builds on decades of fundamental insight from mesoscopic physics. Although the exact boundary between AO and wavefront shaping is still rather fuzzy, one possible way to define the crossover is the point at which low-order mode corrections (in the Zernike basis) are no longer sufficient, which corresponds to at least a few scattering mean free paths. In essence, however, wavefront shaping is nothing more than AO in an extreme regime of wave perturbation.
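The idea of iterative, feedback-based focusing can be illustrated with a minimal numerical sketch. It assumes a hypothetical scattering medium modeled as one row of a random complex Gaussian transmission matrix, and it uses a simple sequential scheme in which each input mode is visited once and the trial phase giving the brightest target is kept. The function names, the number of modes, and the number of phase steps are illustrative choices; this is one generic variant of such an algorithm and not necessarily the procedure used in [1].

```python
import cmath
import math
import random


def focus_intensity(transmission, phases):
    """Intensity at the target: |sum_n t_n * exp(i * phi_n)|^2."""
    field = sum(t * cmath.exp(1j * p) for t, p in zip(transmission, phases))
    return abs(field) ** 2


def optimize_focus(transmission, n_phase_steps=8):
    """Visit each input mode once and keep the trial phase giving the brightest target."""
    phases = [0.0] * len(transmission)
    best = focus_intensity(transmission, phases)
    for mode in range(len(phases)):
        best_phase = phases[mode]
        for step in range(n_phase_steps):
            phases[mode] = 2.0 * math.pi * step / n_phase_steps
            trial = focus_intensity(transmission, phases)
            if trial > best:
                best, best_phase = trial, phases[mode]
        phases[mode] = best_phase  # restore the best phase found for this mode
    return phases, best


def random_medium(n_modes, seed=0):
    """Hypothetical scattering medium: one row of a random complex Gaussian matrix."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(2.0 * n_modes)  # mean total transmitted intensity ~ 1
    return [complex(rng.gauss(0, 1), rng.gauss(0, 1)) * scale for _ in range(n_modes)]


transmission = random_medium(64)
flat = focus_intensity(transmission, [0.0] * 64)
_, shaped = optimize_focus(transmission)
print(f"unshaped: {flat:.3f}, after optimization: {shaped:.3f}")
```

In this toy model the intensity after optimization grows roughly in proportion to the number of controlled modes, which is why scaling up the number of modes matters for this kind of focusing.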

Deep imaging in complex environments is a hugely important challenge, from noninvasive biomedical investigations to seeing through fog. However, due to the highly scattering nature of many real-world samples, direct imaging in complex samples, such as biological tissues, is conventionally limited to shallow depths of a few hundred microns. While other imaging modalities, such as ultrasound, magnetic resonance imaging (MRI), and x-rays, can penetrate deeper into biological tissue, they are inferior to optical microscopy in terms of resolution (limited by the wavelength of light), variety of contrast mechanisms (e.g. chemical sensitivity, and functional information), or their non-ionizing nature. Wavefront shaping offers a unique possibility: to achieve optical resolution focusing and imaging, without being limited by the exponential decay of ballistic photons with depth. It is relatively safe to say that, to obtain micrometer or submicrometer resolution images deep in complex media, retrieving information from scattered light (either through physical wavefront control or through computation) is the only way to go.

One of the main reasons for the fast recent progress in the field of wavefront shaping is the great technological advances in both digital modulators and detectors. Spatial light modulators (SLMs) based on a variety of technologies, from liquid crystals through micro-electro-mechanical systems to acousto-optic modulators, now allow one to ramp up the number of controlled modes and speed. The progress in cameras and detector technologies has also had a great impact in the field due to several now widely available devices from multi-megapixel fast cameras, through ultrasensitive electron-multiplying CCDs (EMCCDs) and sCMOS detector technologies, to single-photon detector arrays.

The great advancements in modulators and detectors come hand in hand with the now available computational power, data bandwidth and memory, which are required to digitally transfer and process the huge amounts of scattered light information. The field also naturally strongly benefits from the current advancements in signal processing and AI revolution, with the emergence of deep learning (DL). In some instances, these advancements in signal processing and machine learning algorithms allow one to simplify or even shortcut the stringent requirements of wavefront shaping, both in terms of number of measurements and even in the need for a wavefront shaping device.

Simultaneously, the field continuously revisits and makes use of theoretical concepts from mesoscopic physics to improve imaging and light delivery deep inside visually opaque samples. A first example is the transmission matrix, initially a theoretician concept, which became a versatile tool for imaging once it was demonstrated that it could be effectively recorded. Another notable example for imaging is the optical 'memory effect,' a correlation of multiply scattered waves predicted in the 1980s. It was proposed for imaging (and other tasks) as early as the 1990s in a visionary work by Freund [2]. However, it is only in the last decade that its practical potential has been realized and put into practice thanks to wavefront shaping. Very recently, fundamental concepts such as open channels, or time-delay eigenstates, have been increasingly studied in the context of imaging and light control in complex media.

The applications of wavefront shaping now span well beyond simple focusing and imaging inside complex media: it has been employed to allow looking around corners, for energy delivery through opaque samples, for trapping and optical manipulation, near-field imaging, plasmonics, spectroscopy, and ultrafast pulse shaping; virtually all aspects of photonics have been explored in combination with wavefront shaping. One specifically important extension of wavefront shaping is its application for light control through long multimode and multicore fibers, which has emerged as an extremely fruitful path. In particular, it allows the development of miniature lensless endoscopes for deep imaging, now a very active subfield.

In terms of imaging modalities, wavefront shaping has been experimentally demonstrated in essentially every widespread optical modality (most often in proof of principle experiments): these include confocal imaging, multiphoton imaging, photoacoustics, acousto-optics, phase contrast, optical coherence tomography, fluorescence imaging, structured illumination, temporal and spectral control, Raman, and other spectroscopic techniques. Interestingly, optical super-resolution techniques, such as STED, PALM, and STORM, still remain to date largely unexplored in the scattering regime, although significant progress in aberrating specimens using AO has been reported [3].

By and large, the field is now relatively mature, but remains very active and shows no sign of slowing down in terms of innovation; one can cite very recent progress such as fluorescence-based incoherent transmission matrices, applying DL approaches to image reconstruction, and the emergence of novel ultrafast SLMs, to cite just a few salient results. It also focuses more and more on applications in real-world samples rather than basic proof of principle demonstrations.

In this roadmap we highlight, from multiple and different perspectives, many of these recent advances as well as the challenges and opportunities that the field may offer in the years to come.

1. Three-dimensionally resolved fluorescence microscopy in deep-scattering biological tissue

Aaron K LaViolette and Chris Xu

School of Applied and Engineering Physics, Cornell University, Ithaca, NY, 14853, United States of America

Status

One-photon (1P) confocal, two-photon (2P) and three-photon (3P) microscopy provide three-dimensionally resolved, high-spatial-resolution fluorescence imaging in scattering biological tissues. 1P confocal microscopy is typically only applicable within shallow imaging depths, while 2P microscopy (2PM) and eventually 3P microscopy (3PM) are preferred as the imaging depth increases. Such a photon 'upmanship' [4] for deep tissue fluorescence imaging can be understood by considering the effective attenuation coefficient (αe) of the tissues for ballistic photons, the maximum allowable power, multiphoton cross sections, out-of-focus background fluorescence generation, and availability of fluorophores.

αe is a function of excitation wavelength and is the sum of the scattering coefficient (αs) and absorption coefficient (αa), i.e. αe = αs + αa. For in vivo imaging, the absorption of brain tissue is dominated by blood and water. Figure 1 shows the calculated and experimentally measured αe for mouse brain tissue in vivo [5–11]. Because the excitation power at the focus, P(z), decreases exponentially as a function of imaging depth z, i.e. P(z) = P0 exp(−αe z), where P0 is the power at the brain surface, the value of αe is the most important consideration for deep imaging. Therefore, the preferred excitation wavelengths for deep tissue imaging reside within the long wavelength windows around 1300, 1700, and 2200 nm.
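To make the exponential dependence concrete, the short sketch below evaluates αe = αs + αa and P(z) = P0 exp(−αe z) for two sets of coefficients. The numerical values are hypothetical placeholders chosen only for illustration; they are not taken from figure 1 or from the cited measurements, and the function names are likewise assumptions.

```python
import math


def effective_attenuation(alpha_s, alpha_a):
    """Effective attenuation coefficient: alpha_e = alpha_s + alpha_a (per mm)."""
    return alpha_s + alpha_a


def power_at_focus(p0, alpha_e, depth_mm):
    """Ballistic excitation power at depth z: P(z) = P0 * exp(-alpha_e * z)."""
    return p0 * math.exp(-alpha_e * depth_mm)


# Hypothetical (alpha_s, alpha_a) pairs in mm^-1, for illustration only
windows = {"shorter wavelength": (6.0, 0.1), "longer wavelength": (2.5, 0.5)}
for name, (alpha_s, alpha_a) in windows.items():
    alpha_e = effective_attenuation(alpha_s, alpha_a)
    fraction = power_at_focus(1.0, alpha_e, 1.0)
    print(f"{name}: alpha_e = {alpha_e:.1f} /mm, fraction left at 1 mm = {fraction:.2e}")
```

Even a modest difference in αe changes the power reaching the focus by an order of magnitude or more at millimeter depths, which is the reason the choice of spectral window dominates the other considerations.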

Figure 1. Absorption coefficient (red), scattering coefficient (blue) and effective attenuation coefficient (black) plotted as a function of wavelength. The scattering coefficient is calculated using the Mie theory for a tissue-like phantom solution of polystyrene beads at a concentration of 5.4 × 10^9 ml^−1, which mimics scattering in the mouse cortex [5]. The absorption coefficient is the combined effect (sum) of water [10] and blood [11]. The blue dotted line is extrapolated for scattering below 667 nm by fitting an exponential model to the calculated scattering attenuation coefficient values between 667 and 1000 nm. The black dotted line reflects that extrapolated scattering data were used. Superimposed are green triangles which are experimental measurements of the mouse brain presented in [5–9]. The black bars below the graph indicate the ranges where 1P, 2P and 3P imaging are typically done, which are largely determined by the availability of fluorophores and the effective attenuation coefficient. The grey highlighted regions show the long wavelength windows for deep tissue imaging.

While αe is the most important consideration for penetration depth, tissue absorption determines the maximum permissible power for imaging (i.e. the maximum value of P0) [7]. For the three spectral windows with the lowest αe, the absorption increases at the longer wavelength (figure 1). Although the values of αe around 1300 and 2200 nm are comparable, the 1300 nm window is preferred because of the higher allowable power due to the lower tissue absorption, while the low αe around 1700 nm indicates that the 1700 nm window is the best for the deepest imaging.

Most existing fluorophores require excitation wavelengths within the visible to near infrared (NIR) range, which limits 1P confocal imaging to excitations between about 350 and 700 nm, where αe is large. Thus, 1P confocal microscopy is generally best in shallow regions. While 2P and 3P excitation makes the low attenuation spectral windows compatible with existing fluorophores, only red or NIR fluorophores can be excited in the 1300 nm window by 2P excitation, excluding the most commonly used green and yellow fluorophores for deep tissue 2PM. 3P imaging can be performed within the 1300 nm spectral window for blue, green, and yellow fluorophores, and the 1700 nm spectral window for red and NIR fluorophores. The spectral 'gap' for 3P imaging is due to the large water absorption between 1400 and 1600 nm. The 2200 nm window, in addition to the high tissue absorption, is too long for nearly all existing fluorophores even with 3P excitation.

In addition to the practical considerations listed above, the imaging depth of three-dimensionally resolved imaging is fundamentally limited by out-of-focus background fluorescence [12]. In general, a higher-order nonlinear excitation will result in a higher excitation confinement and less out-of-focus background, resulting in a higher signal-to-background ratio (SBR). However, higher-order nonlinear excitation is less efficient due to the small multiphoton excitation cross sections. Therefore, the improvement in SBR comes at the expense of the signal strength. The trade-off between SBR and signal strength indicates that there is a depth for the fluorophore under consideration beyond which 3P imaging outperforms 2P imaging [7]. This depth can be quantified based on a metric grounded in detection theory (e.g. for the detection of calcium-transients with the d' metric [7, 13] or binary objects with the binary detection factor metric [14]).

Current and future challenges

The penetration depths of 1P confocal and 2P imaging are limited by the SBR (i.e. the depth limit). Neglecting the Stokes shift and the pinhole size, 1P confocal imaging has nearly the same point-spread function as 2PM. Therefore, the depth limit determined by the SBR is similar for 1P confocal and 2P imaging when the excitation wavelength is the same. Both theoretical and experimental studies show that the depth limit for 1P confocal and 2P imaging is between 1.5 and 2 mm, when imaging mouse brain vasculature, in the long wavelength windows of 1300 and 1700 nm [15–18]. The biggest challenge facing deep tissue long wavelength 1P confocal microscopy is the lack of IR dyes with excitation wavelengths >1200 nm. In addition, low-cost, low-noise, high-gain, high-quantum-efficiency detectors in the 1300 and 1700 nm windows must be developed. For long wavelength 2PM, the availability and performance of deep red and NIR functional indicators are major limitations for practical applications.

3P excitation best matches existing dyes with the low tissue attenuation windows, and 3P imaging depths of >1 mm and >2 mm have been demonstrated, respectively, for green/yellow fluorophores using 1300 nm excitation [19, 20] and red fluorophores using 1700 nm excitation [21]. The higher-order nonlinear excitation has also enabled 3PM to image deep in densely labeled samples or through a highly scattering layer (e.g. the mouse skull or corpus callosum [22]) where the imaging depth of long wavelength 2PM is severely limited by the SBR. Theoretical analysis and experimental studies on tissue phantoms show that 3PM has the potential to image much deeper than has been achieved so far. Indeed, the predicted maximum penetration depth limited by the SBR is about 3–4 mm for 3PM when imaging mouse brain vasculature, which is nearly twice the deepest imaging today [23]. The biggest challenge in pushing the imaging depth of 3PM is the small 3P cross section. Together with the maximum allowable power, small 3P cross sections limit the 3P signal strength and currently set the practical imaging depth limit.

Advances in science and technology to meet challenges

The depth limits of long wavelength 1P confocal and 2P imaging have already been reached in the mouse brain. However, further improvements are required to transform them into valuable practical tools for biological research. For deep tissue 1P confocal microscopy, the biggest advancement would be creating a plethora of fluorophores, fluorescent proteins, and functional indicators with excitation wavelengths >1200 nm. However, significant effort has been devoted to finding long wavelength fluorophores for in vivo imaging in the last 10–20 years, which has proven to be challenging, particularly for fluorophores excited with wavelengths >800 nm. Quantum dots (QDs), including carbon dots, are probably the most promising path so far but making QDs into robust functional indicators may yet prove difficult. Additionally, the recent development of superconducting nanowire detectors (SNDs) is promising for 1P confocal imaging at 1300 and 1700 nm [18]. While still expensive, advancements in materials and manufacturing for SNDs could reduce the cost and make these detectors affordable for biological imaging. Noticeable progress has been made in deep red or NIR fluorescent proteins and functional indicators for 2PM around 1300 nm. Further improvement in their performance will greatly improve the practical utility of long wavelength deep tissue 2PM and could make long wavelength 2PM of deep red or NIR fluorophores an alternative to 3PM of green fluorophores for many applications.

The depth limit of 3PM has not been reached in any in vivo biological samples and increasing the imaging depth of 3PM will require improving the signal strength. Fluorophores with enhanced multiphoton cross sections can increase the imaging depth of 2PM and 3PM and lower the cost of the excitation source. While past attempts to explore the molecular structures of fluorophores have largely failed to create new ones with extraordinarily large 2P or 3P cross sections for in vivo imaging, exploring resonance enhanced 3P excitation appears to be promising. Indeed, 3P cross sections enhanced approximately tenfold have already been demonstrated [24]. Additionally, adaptive optics (AO), which is a well-established technique for improving the spatial resolution and increasing the signal generation for in vivo brain imaging [25, 26], may be considered. AO has been shown to have a larger impact in 3PM than in 2PM due to the higher-order nonlinear excitation and deeper imaging depth [27], where a 5–10 times signal strength increase can be achieved for deep 3PM [28]. One of the AO challenges is the lack of a fast and direct wavefront sensing method in deep scattering tissue.

For both 2PM and 3PM, a promising way forward is the development of high-pulse-energy, low-repetition-rate femtosecond lasers for deep imaging such as optical parametric chirped pulse amplifiers. This is because the pulse energy required by the laser increases exponentially as a function of imaging depth, and so the repetition rate of the laser must be reduced exponentially due to the limit on the maximum allowable power. The ideal laser for deep tissue imaging should provide constant output average power and a user-defined, tunable repetition rate. While lasers with high pulse energy and tunable repetition rate have become available for 2PM and 3PM in the last five years, such sources cannot yet maintain a constant output power as the repetition rate is tuned. Furthermore, by illuminating the regions of interest only, pulse-on-demand systems (e.g. the adaptive excitation source [29]) can increase the signal strength without increasing the average excitation power in the sample or requiring higher average laser output. Such adaptive lasers can improve the performance of deep tissue 2PM (e.g. imaging speed) and are likely to prove essential for reaching the depth limit for 3PM.
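The link between depth, pulse energy, and repetition rate can be sketched with a small calculation. It assumes that a fixed pulse energy is needed at the focus, that the excitation decays as exp(−αe z), and that the average power at the sample surface is capped by tissue heating. All numbers and function names below are hypothetical and serve only to show the scaling.

```python
import math


def surface_pulse_energy_nj(focus_energy_nj, alpha_e_per_mm, depth_mm):
    """Pulse energy needed at the surface to deliver focus_energy_nj at the given depth."""
    return focus_energy_nj * math.exp(alpha_e_per_mm * depth_mm)


def max_repetition_rate_hz(max_avg_power_mw, focus_energy_nj, alpha_e_per_mm, depth_mm):
    """Highest repetition rate that keeps the average surface power within the cap."""
    energy_j = surface_pulse_energy_nj(focus_energy_nj, alpha_e_per_mm, depth_mm) * 1e-9
    return (max_avg_power_mw * 1e-3) / energy_j


# Hypothetical values: 100 mW power cap, 1 nJ at the focus, alpha_e = 3 per mm
for depth_mm in (0.5, 1.0, 1.5, 2.0):
    rate_mhz = max_repetition_rate_hz(100.0, 1.0, 3.0, depth_mm) / 1e6
    print(f"depth {depth_mm:.1f} mm: repetition rate <= {rate_mhz:.3f} MHz")
```

Under these assumptions each additional attenuation length of depth lowers the usable repetition rate by a factor of e, which illustrates why a source with a tunable repetition rate at constant average power is attractive.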

Concluding remarks

This roadmap aims to elucidate the challenges for high-spatial-resolution, deep tissue, three-dimensionally resolved fluorescence microscopy. The compatibility of the long wavelength windows and the availability of fluorophores, together with the trade-off between the SBR and the signal strength, form the basis for the current choices of 1P confocal microscopy, 2PM, and 3PM and the depth limit of each imaging modality. High-spatial-resolution fluorescence imaging in deep scattering tissue is challenging because the 'difficulty' grows exponentially as a function of imaging depth. While long wavelength multiphoton microscopy can image at >2 mm in the mouse brain, the imaging depth is still less than a quarter of that of an adult mouse brain in vivo. Future advancements in fluorophores, detectors, and lasers can perhaps push the imaging depth of three-dimensionally resolved fluorescence microscopy by another factor of two (e.g. 3–4 mm when imaging the mouse brain vasculature). Breakthrough innovations are needed to image much deeper than long wavelength multiphoton microscopy.

Acknowledgment

This work has been funded by a National Science Foundation (NSF) Grant (DBI-1707312).

2. Adaptive optical multiphoton fluorescence microscopy

Na Ji

University of California Berkeley, United States of America

Status

Adaptive optics (AO) was originally developed to combat atmospheric aberrations that degrade the image quality of astronomical objects. Here, wavefront distortion is measured directly using devices such as a Shack–Hartmann wavefront sensor. With increasing applications of optical microscopy in imaging of complex tissues, AO methods have been developed for microscopy to correct sample-induced aberrations in order to maintain optimal imaging performance [25]. Biological aberrations are distinct from their astronomical counterparts in that there is little to no temporal variation in the aberration profile but the samples are often optically opaque. Together, these characteristics have motivated the development of indirect wavefront sensing methods whose performance is not affected by light scattering.

Both direct and indirect wavefront sensing have been applied to multiphoton fluorescence microscopy (MPFM). The most popular and powerful method for imaging opaque samples is to measure the tissue-induced aberrations in the excitation light and then cancel them out by pre-shaping the excitation wavefront using a deformable mirror or spatial light modulator (SLM). To reduce scattering in direct wavefront sensing, far red and near infrared (NIR) fluorophores were employed [30, 31]. Indirect wavefront sensing methods use serial evaluations of image metrics (e.g. brightness, spatial resolution, contrast, point spread function) while manipulating the excitation light to deduce the wavefront profile [32–36]. When implemented properly, both types of methods are capable of forming a diffraction-limited focus deep in tissue to excite fluorescence at diffraction-limited spatial resolution.
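The principle of indirect wavefront sensing, namely deducing the aberration from serial evaluations of an image metric, can be sketched as follows. The example assumes a toy metric equal to 1 minus the sum of squared residual mode coefficients (a small-aberration model), applies three bias values per mode, and places the correction at the vertex of the fitted parabola. This is a generic modal scheme with hypothetical names and values, not the specific methods of [32–36].

```python
def image_metric(aberration, correction):
    """Toy metric: 1 - sum of squared residual mode coefficients (small-aberration model)."""
    return 1.0 - sum((a + c) ** 2 for a, c in zip(aberration, correction))


def sensorless_correction(measure, n_modes, bias=0.5):
    """Estimate a correction mode by mode from three metric readings per mode."""
    correction = [0.0] * n_modes
    for mode in range(n_modes):
        readings = []
        for offset in (-bias, 0.0, bias):
            trial = list(correction)
            trial[mode] += offset
            readings.append(measure(trial))
        m_minus, m_zero, m_plus = readings
        curvature = m_plus + m_minus - 2.0 * m_zero
        if curvature < 0:  # the fitted parabola has a maximum along this mode
            correction[mode] += bias * (m_minus - m_plus) / (2.0 * curvature)
    return correction


# Hypothetical aberration, unknown to the algorithm, expressed in three modes
hidden = [0.3, -0.2, 0.1]
estimate = sensorless_correction(lambda c: image_metric(hidden, c), n_modes=3)
print("correction:", [round(c, 6) for c in estimate])
print("metric after correction:", round(image_metric(hidden, estimate), 6))
```

Because the toy metric is exactly quadratic and the modes are independent, one pass suffices here. With real image metrics the fit is only approximate, so repeated passes and careful choice of the bias amplitude would typically be needed.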

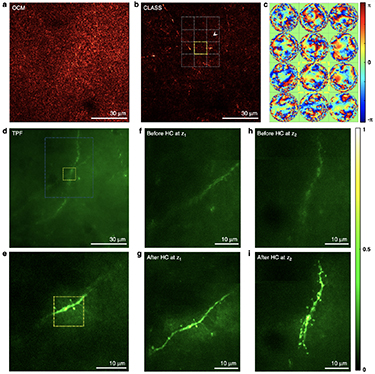

In the opaque mouse brain, AO has enabled MPFM to visualize subcellular structures such as dendrites and dendritic spines hundreds of micrometers below the brain surface (figure 2). It has also enabled biological discovery: using an AO-enabled two-photon fluorescence microscope, we characterized the input from the visual thalamus in the mouse visual cortex and discovered previously unknown orientation selectivity of their synapses [37]. The rich repertoire of AO technologies and extensive demonstrations of their capabilities have firmly established AO to be essential for high-resolution MPFM investigations of complex tissues at depth.

Figure 2. AO improves image quality of multiphoton fluorescence microscopy in the live mouse brain. (a) Two-photon fluorescence images of dendrites and dendritic spines before and after AO using direct wavefront sensing [31]. (b) Three-photon fluorescence images of a neuron, its dendrites, and dendritic spines before and after AO using indirect wavefront sensing [37].

Current and future challenges

Because fluorophores emitting in the visible spectrum are most commonly used to probe biological processes, the requirement of introducing additional fluorophores with far red and NIR emission for direct wavefront sensing complicates sample preparation. The easiest way to introduce these far red/NIR fluorophores into brain tissues is by injecting them (typically chemical dyes) into the blood [31]. However, this approach may lead to corrections with smaller isoplanatic patches due to the high curvature of blood vessels and is not applicable to tissues devoid of vasculature. Indirect wavefront sensing methods can work with fluorophores in the visible spectrum, but the depth at which they can be applied in opaque tissues remains limited by scattering of the excitation light and the brightness of the fluorophores.

Currently, AO has largely remained the domain of physicists rather than biologists. One challenge, therefore, is how to maximize the impact of AO on biological fields where optical microscopy is routinely applied to enable discovery. AO systems developed for telescopes in large observatories are maintained by in-house staff who ensure their optimal performance, allowing external users to benefit from the high resolution without requiring them to have optical expertise. However, there are no microscopy facilities that operate on a similarly large scale, with most biology laboratories having their own microscopes or relying on core facilities at their institutions. Integrating AO modules into existing commercial microscopes is hindered by both software- and hardware-related obstacles. Many AO methods require access to software that controls the microscope, acquires data, and processes images, which, as provided by microscope manufacturers, is almost always proprietary and closed source. Typical commercial MPFMs are also designed without careful consideration of optical conjugation. For example, the galvos for 2D scanning of the excitation focus are usually not conjugated to each other. In this case, if the excitation light is shaped by a wavefront corrector (e.g. an SLM or deformable mirror) before the galvos, the corrective pattern would be in constant motion at the objective back pupil plane during scanning, reducing the effective area for AO correction. Placing the wavefront corrector in between the galvos and the objective would solve the motion problem, but requires physical access that is often unavailable. Finally, in commercial systems, the microscope objective is often placed with its back focal plane substantially offset from the plane conjugated to the wavefront sensing device, which can lead to similar performance degradation. Given the lack of commercially available adaptive optical microscopes, implementation of AO in laboratories that pursue biological inquiry has been limited to a few groups straddling optics and biology.

Advances in science and technology to meet challenges

Because longer wavelength light is less scattered by tissue, using excitation light of longer wavelengths (three- versus two-photon, e.g. 1.3 µm versus 0.9 µm excitation for green fluorophores) can increase the imaging depth in tissue (see previous section). At such large imaging depths, AO remains essential in achieving high spatial resolution. Because tissues often absorb more at these longer wavelengths, by increasing the focal intensity, AO enables the reduction of average excitation power and reduces heating-induced tissue damage.

Effort has also been put into developing far-red and NIR fluorescence proteins, which target cell types, biomolecules, and biological processes with much higher specificity than chemical dyes. For example, recently a NIR protein was developed to sense intracellular calcium concentration [38]. Although still inferior to visible fluorescent proteins in terms of brightness and photostability, continued efforts in engineering better NIR proteins could eventually allow them to provide both structural and functional information, as well as act as guidestars for direct wavefront sensing, substantially reducing the demand for sample preparation.

Due to the lack of commercially available microscopy systems, to maximize the impact of AO technologies, it is essential to reduce the complexity of their implementation both in terms of hardware and software. Direct wavefront sensing requires a sensor and a modulator of the wavefront, both of which need to be carefully calibrated and aligned. Therefore, for labs to integrate AO into their existing microscopes, indirect wavefront sensing techniques that utilize a single wavefront modulator can be more easily incorporated into the microscopy beam path. A standalone software module that can be operated independently of the microscope control program has also been developed [36], which should further lower the threshold of entry for biological laboratories.

Concluding remarks

By canceling out tissue-induced aberrations and recovering a diffraction-limited focus for multiphoton excitation, AO methods utilizing both direct and indirect wavefront sensing have led to drastic improvement of image quality of MPFM in complex tissues. Although their applications have yet to go much beyond demonstrations of physical principles, efforts have been made to improve the accessibility of these methods to non-experts. Together with the continued push to develop brighter fluorophores with longer wavelengths, AO will become an essential component of cutting-edge MPFMs in pushing the imaging depth for biological investigations at high spatial resolution.

Acknowledgment

This work is supported by the National Institutes of Health (U01NS118300).

3. Optical wavefront engineering for intravital fluorescence microscopy

Meng Cui

Electrical and Computer Engineering and Biology Department, Purdue University, United States of America

Status

Cellular resolution imaging in live biological systems holds great significance in biology and medicine [39]. Thanks to the rapid advance of genetically encoded fluorescent functional indicators, various cellular activities can be captured by optical measurement. However, a major challenge in applying optical measurement in live animals is the limited imaging depth as a result of the inhomogeneous refractive index of biological tissue [40–42]. Achieving in vivo large volume high-throughput 3D imaging remains a challenge in most animal models. Wavefront engineering has been explored to improve the performance of deep tissue imaging in three directions. First, tissue-induced light scattering and aberration are reversible processes. Proper engineering of the optical wavefront can correct optical aberrations and even suppress light scattering, which can improve the imaging spatial resolution and signal-to-noise ratio (SNR) [40–42]. Second, wavefront engineering can be employed to achieve 3D volumetric imaging [39]. Various devices can be employed to generate a defocusing wavefront which leads to axial control of the imaging plane. Third, wavefront engineering can be coupled with miniature invasive imaging probes to access extremely large depths [43]. Miniature imaging devices often have inherent aberration, which greatly reduces their imaging performance including resolution, field of view (FoV), and imaging throughput. Wavefront engineering can assist miniature probe imaging to improve the overall imaging performance. Further advances in all three directions are expected to enable new observations and knowledge in biomedical research.

Current and future challenges

Cellular resolution functional imaging has been widely employed in biomedical research (figure 3). In immunology, intravital imaging has been used to track the motility and interactions of immune cells, which provides key information about the function and dynamics of various cell types [39]. In neuroscience, in vivo measurements are performed over a wide range of spatiotemporal scales. To study the neuronal plasticity related to development, learning, and memory, one needs to observe subtle morphological changes of neuronal structures at a sub-micrometer spatial resolution over days or weeks. To capture the neuronal activity during behavior, fluorescence images based on calcium or voltage indicators need to be recorded at a 10–1000 Hz frame rate with cellular or subcellular resolution. Spatial resolution, 3D signal confinement, and SNR are highly important for these measurements, which demand high-quality focus deep in biological tissue. Although the index of refraction of biological tissue is similar to that of water, the index of cellular components is slightly higher, which causes spatially varying wavefront distortion. Moreover, the movement of cellular structure and the trafficking of blood cells also cause temporal variation. To fully compensate for the wavefront distortion, we need to provide dynamic correction which also varies over space and time. As the cellular dynamics are inherently three-dimensional, we also need to capture the dynamics in 3D. For applications on awake animals, the motion of the animal also causes image instability. As a result, slow recording will suffer from motion artifacts. With high-speed 3D recording techniques, we can eliminate such artifacts through post-measurement image registration. To access very deep regions (e.g. several millimeters or more), miniature invasive imaging probes are commonly employed. 
However, the design and the dimension limit of these miniature lenses cause inevitable aberrations, which are also field-position-dependent. Routine applications often suffer from reduced resolution, SNR, FoV, and throughput. Advanced wavefront correction is needed to enable high-quality large volume imaging through these miniature imaging devices.

Figure 3. High-speed 3D volumetric imaging of a mouse brain. Cyan, microglia; orange, blood cell.

Advances in science and technology to meet challenges

To provide in vivo wavefront measurement, sensor-based and sensorless methods have both been developed. For multiphoton excitation, the excitation and emission wavelengths are far apart. The short wavelength emission suffers from much more severe aberration and scattering. Therefore, sensorless methods that modulate the excitation wavefront profiles are often preferred [40]. High-speed methods can achieve microsecond-level measurement time per spatial mode [44]. To correct for highly complex wavefront distortion, the iterative multi-photon adaptive compensation technique (IMPACT) has been developed [40], which leverages the iterative feedback and the inherent nonlinearity in multi-photon imaging to force the focus to converge to a diffraction-limited spot inside highly scattering biological tissue. In addition to high-resolution imaging inside the thick brain and lymph node tissue, IMPACT also enables high-resolution noninvasive transcranial imaging through intact mouse skulls [45]. For high-throughput large FoV imaging, the imaging system needs to provide simultaneous spatially varying aberration correction. Multi-pupil adaptive optics has been developed to achieve high-speed, high-resolution imaging [46]. Moreover, defocusing control can be applied to the desired region to achieve non-planar imaging such that the features of interest can be shifted to the same 2D recording plane for simultaneous fast recording. Toward fast 3D volumetric imaging, an optical phase-locked ultrasound lens has been explored to provide a microsecond-scale defocusing wavefront control [39]. Such capabilities can convert existing 2D raster scanning microscopes for fast 3D volumetric recording. An important technique for 3D laser scanning imaging is the remote focusing method [47], which relays a defocusing wavefront through a pair of objective lenses to the desired focal plane to rapidly shift the laser focus. 
The perfect operation of remote focusing demands perfectly telecentric objective and relay lenses. However, perfect telecentricity is not the design goal of common objective lenses and is hardly achievable. To improve the imaging performance, an image plane adaptive correction method has been developed which can greatly extend the working range of remote focusing systems [48]. For imaging beyond several millimeters, miniature invasive probes are often used. Their inherent aberration limits the resolution and accessible tissue volume. Recently, the clear optically matched panoramic access channel technique (COMPACT) has been developed, which provides two to three orders of magnitude increase in tissue access volume [43]. Combined with aberration correction, COMPACT can yield high-quality images over massive tissue volumes.

Concluding remarks

The ultimate goal of in vivo imaging is to noninvasively image deep inside live biological systems. Currently, the majority of the development still targets the correction of static or slowly varying low-order aberrations. Although these developments lead to better resolution and SNR, the imaging depth advance is still moderate. A significant increase in imaging depth can only happen if the wavefront correction can handle the high-order, spatially varying, dynamic wavefront distortion in live animals. Currently, none of the established methods is close to achieving this goal. Major innovations that can offer real-time high-speed wavefront measurement and correction are needed to break the current limit. An important aspect of tool development is broad and routine adoption by users, which requires the developed technique to be highly robust and easy to use. Without such capabilities, the development will likely have negligible impact.

Acknowledgments

M C acknowledges the support by NIH Grants 1U01NS094341, U01NS107689, RF1MH120005, RF1MH1246611, U01NS118302, 1R01NS118330, R21EY032382, Purdue University, and the scientific equipment from HHMI.

4. High-speed wavefront shaping

Rafael Piestun

University of Colorado Boulder, United States of America

Status

Spatially modulating light at high speed is critical for the success of multiple optical techniques and applications. Early use of spatial light modulators (SLMs) in 3D holographic displays, optical signal processing, and pattern recognition was hampered by the lack of adequate SLMs at the time. From initial liquid crystal displays with low space-bandwidth product and poor phase modulation to photorefractives, a plethora of modulation techniques would require decades to mature to the level required for current deep imaging needs. Adaptive optics (AO) became practical early on through the use of mechanically deformable mirrors which, despite having a relatively small number of actuators, adapt effectively to the task of compensating for optical aberrations.

Most biological applications of wavefront shaping (WFS) require fast wavefront modulation, regardless of the specific technique used to calculate the compensating wavefront. In techniques that use iterative optimization, optical systems utilize feedback over multiple iterations to attain a wavefront that satisfies a target performance. Alternatively, in transmission matrix (TM) calculations, a large set of wavefronts needs to be projected and the respective outputs measured, ideally before the medium changes again. Similarly, in direct digital phase conjugation, the spatial modulation is calculated directly from measurements of the wavefront, but latencies and dynamic changes of the medium are still an issue. Fast spatial modulation is critical in high-speed scanning through or inside a complex medium regardless of how the SLM pattern is obtained, including techniques based on digital phase conjugation, TM, or iterative optimization.
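The difference between feedback-based optimization and a TM or phase-conjugation solution can be illustrated with a toy simulation. The following is only a minimal sketch under strong assumptions, not the method of any cited work: the medium is reduced to one row of a random complex Gaussian transmission matrix (a single target speckle grain), the feedback signal is noiseless, and the modulator is phase-only. The names `random_tm_row`, `focus_intensity`, `stepwise_optimize`, and `phase_conjugate` are hypothetical.

```python
import cmath
import math
import random


def random_tm_row(n_modes, rng):
    """One row of a toy transmission matrix with circular complex Gaussian entries."""
    return [complex(rng.gauss(0, 1), rng.gauss(0, 1)) / math.sqrt(2 * n_modes)
            for _ in range(n_modes)]


def focus_intensity(tm_row, phases):
    """Intensity at the target output mode for a phase-only input wavefront."""
    field = sum(t * cmath.exp(1j * p) for t, p in zip(tm_row, phases))
    return abs(field) ** 2


def stepwise_optimize(tm_row, n_steps=8):
    """Iterative feedback: try n_steps phases on each input mode in turn, keep the best."""
    phases = [0.0] * len(tm_row)
    trial_phases = [2 * math.pi * k / n_steps for k in range(n_steps)]
    for i in range(len(phases)):
        best_phase, best_signal = phases[i], focus_intensity(tm_row, phases)
        for p in trial_phases:
            phases[i] = p
            signal = focus_intensity(tm_row, phases)
            if signal > best_signal:
                best_phase, best_signal = p, signal
        phases[i] = best_phase
    return phases


def phase_conjugate(tm_row):
    """Known-TM solution: cancel the phase of every matrix element."""
    return [-cmath.phase(t) for t in tm_row]


rng = random.Random(0)                      # fixed seed for reproducibility
row = random_tm_row(64, rng)                # 64 input modes (illustrative)
flat_intensity = focus_intensity(row, [0.0] * len(row))
iterative_intensity = focus_intensity(row, stepwise_optimize(row))
conjugate_intensity = focus_intensity(row, phase_conjugate(row))
print(flat_intensity, iterative_intensity, conjugate_intensity)
```

In this sketch the iterative pass needs `n_steps` feedback measurements per input mode, so its duration scales directly with the modulator update rate, whereas the phase-conjugate solution is computed in one step once the matrix row is known.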

In live biological tissue, typical decorrelation timescales are of the order of milliseconds. However, the need for fast modulation techniques goes beyond biology, being a requirement in dynamic imaging, sensing and focusing, with implications in optical communications as well as quantum and nonlinearity control.

Recent demonstrations using micro-electro-mechanical systems (MEMS) and acousto-optics have shown a path from the early modulation rates in the tens of Hz to hundreds of kHz. These experiments help motivate the development of larger and better SLM arrays, as well as the investigation into novel physical modulation mechanisms. In effect, current SLM constraints imply that speed is typically achieved at the expense of a lower number of degrees of freedom or a reduction in efficiency, if not both.

This section reviews recent progress in achieving high-speed WFS, requiring the adoption of new modulation mechanisms, as well as optical, electronic, and computation optimization.

Current and future challenges

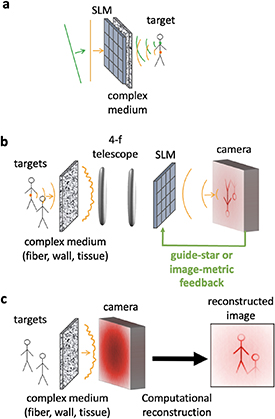

A general WFS problem, as depicted in figure 4, seeks to image through or inside a complex medium. These are defined as highly inhomogeneous media that generate multiple scattering events for any light propagating in their interior, hence scrambling the information to a degree that has been traditionally approached via statistical methods. This is the result of having a huge number of scatterers, with unknown locations and optical properties. Additionally, complicating the problem even further, these scatterers and the whole medium are dynamically changing.

Figure 4. A general wavefront shaping problem: coherent light is modulated by N independent degrees of freedom of a spatial light modulator (SLM) to attain a target metric that helps imaging through/within scattering media. A sensing mechanism (located on either side of the scatterer) provides feedback based on measurements that inform the state of the SLM. Reconstruction algorithms deliver the answer to the target imaging question.

The use of coherent laser light provides high-intensity sources that somewhat mitigate the need to consider chromatic dispersion while enabling sensitive phase measurements. Notwithstanding, multispectral or short pulse generalizations have been considered.

Measurements are provided via a sensing mechanism such as photodetectors collecting excited fluorescence or acoustic transducers detecting photoacoustic signals. These measurements are used to inform and update the SLM state, typically multiple times as the complex medium changes or as part of a sequence of measurements to characterize the medium. The process continues with a series of measurements followed by a matched reconstruction algorithm to attain the target imaging task.

The principle of phase conjugation for the correction of distortions by inhomogeneous objects was recognized shortly after the invention of holography [49]. Nowadays, the concepts of holography, optical, digital, or computer-generated, provide a useful framework for understanding and devising techniques for imaging through highly inhomogeneous media.

The traditional optical holographic process is slow for most dynamic imaging situations. The advent of fast detector arrays, interfacing electronics, SLMs, and computers in combination with a new understanding of complex media and insights into computational imaging have opened opportunities for previously unthinkable imaging performance.

The ideal SLM, in particular, has a large number of pixels, many phase levels, a large phase stroke, and high diffraction efficiency, while simultaneously achieving a fast response time, a high switching frequency, and low latency. While progress in SLM technologies has been significant, the currently available spatial and temporal space bandwidths are far from what is needed when compared to the degrees of freedom of most complex media. Hence, managing tradeoffs is critical to advancing imaging applications.

Advances in science and technology to meet challenges

One approach to overcome current limitations is to use fast SLMs with a lower number of pixels and/or a lower number of states (e.g. binary), while compensating using holographic encoding. For instance, it is possible to use a binary-amplitude SLM such as the digital micromirror device in conjunction with computer-generated holography concepts to achieve millisecond-scale TM measurements [50, 51]. The electronic implementation via dedicated hardware is critical to reduce latencies due to communication and computation [51].
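One widely used way to encode a phase on a binary-amplitude modulator is a binary Lee-type hologram, in which the target phase shifts the fringes of an on/off carrier grating and the phase-modulated beam is picked off in the first diffraction order. The sketch below is an illustration under assumptions and is not claimed to be the exact encoding of [50, 51]: it treats a single row of pixels with a uniform target phase, and the names `lee_hologram_row` and `first_order_field` as well as the carrier period of 64 pixels are hypothetical choices.

```python
import cmath
import math


def lee_hologram_row(phase, n_pixels, period):
    """Binary on/off pattern along one row that encodes a uniform target phase."""
    return [1 if math.cos(2 * math.pi * x / period + phase) > 0 else 0
            for x in range(n_pixels)]


def first_order_field(pattern, period):
    """Normalized complex amplitude diffracted into the +1 order of the carrier."""
    total = sum(b * cmath.exp(-2j * math.pi * x / period)
                for x, b in enumerate(pattern))
    return total / len(pattern)


target_phase = 0.7                                   # radians, arbitrary example
pattern = lee_hologram_row(target_phase, n_pixels=640, period=64)
field = first_order_field(pattern, period=64)

# The first order carries the target phase (up to pixel quantization) with an
# amplitude close to 1/pi, i.e. roughly 10% of the incident power.
print(cmath.phase(field), abs(field) ** 2)
```

The low first-order efficiency in this sketch is one concrete instance of the tradeoff noted earlier in this section, where speed is gained at the expense of efficiency.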

Another possibility is to use the dimensionality transformation enabled by scattering media to take advantage of existing fast 1D modulators [52]. In effect, the actual arrangement of the input modes is almost irrelevant as long as the coupling to the output modes is strong enough. In this case, the scattering medium converts the light from each pixel of the 1D SLM into a 2D speckle pattern. Adjusting the phase of each pixel provides a means to exert control of the output light distribution in two or even three dimensions. Hence, 1D modulation (at 100s of kHz) provides opportunities to control light about three orders of magnitude faster than with liquid crystal SLMs [52].

A little-explored area is the control of nonlinear phenomena using WFS [53]. Nonlinear WFS imaging techniques, such as multi-photon excitation, Raman scattering, or second-harmonic generation, provide new means to interrogate different materials. High-speed modulation could also benefit emerging hybrid imaging modalities [54, 55].

Functional imaging of the brain, an imaging grand challenge, could help understand the neural pathways that trigger human brain function. The use of WFS in multimode fibers has enabled the thinnest endoscopes to functionally image the activity of live neurons [56]. Real-time imaging is critical to follow the neuron action potential patterns (figure 5), accessing the brain with cellular resolution at depths where non-invasive techniques cannot reach.

Figure 5. Structural and in vivo functional imaging with an ultrathin endoscope based on a multimode fiber and wavefront shaping. (Left) Imaging baby hamster kidney cells expressing eGFP. (Right) Hippocampal neuronal tissue culture expressing GCaMP6f; plots show the time signal of two neuronal cells compared with simultaneous measurements using traditional epi-fluorescence microscopy (from [56]).

Emerging techniques also include the use of acousto-optic devices to control the phase via programmable RF signals that encode beams in the medium. This is followed by measurement of the phases of the scattered beams with a fast single-pixel detector. The acousto-optic deflector phase conjugates the beams and creates a spatio-temporal focus possibly scanned at high speed [57].

Concluding remarks

Progress in high-speed WFS is accelerating to such an extent that a description of all advances exceeds the scope of this article. While some ideas might take years to become practical, others are already having an impact by managing tradeoffs such as speed vs degrees of freedom or speed vs system complexity. It should be emphasized that most existing SLMs were not developed targeting WFS, so naturally, some of the current technological limitations (e.g. number of active elements in MEMS devices) are not fundamental in nature. Novel concepts are still needed, for instance with the adoption of tools such as machine learning or novel physical mechanisms for modulation. An intriguing development to watch is based on the use of so-called active metasurfaces [58]. An outgrowth of subwavelength diffractive optics, they implement planar nano-structured arrays whose optical response can be dynamically tuned. The control is achieved by different physical mechanisms and material systems, including mechanical deformation, phase-change media, free-carrier density modulation, and thermo-optic and electro-optic effects.

Acknowledgments

The author acknowledges support from the National Science Foundation (Award 1548924) and the Colorado Office of Economic Development and International Trade.

5. Imaging in complex media by scattered back-illumination

Jerome Mertz, Timothy Weber and Sheng Xiao

Department of Biomedical Engineering, Boston University, United States of America

Status

When imaging in complex media one must first be clear on what exactly one is interested in imaging. For example, in fluorescence imaging, one is interested in the spatial distribution of fluorescent markers. Complexity of the medium in this case can introduce aberrations that degrade image contrast and resolution. On the other hand, when performing label-free imaging, contrast generally arises from scattering, enabling structures of interest to be distinguished by their size. For example, one might wish to image small, point-like scatterers embedded in an aberrating medium of spatially varying refractive index (RI). Alternatively, one might be interested in reconstructing the RI variations themselves, whereupon point-like scatterers only undermine image quality by introducing multiple scattering. This last case can be of particular interest since a retrieval of the RI distribution can enable the application of adaptive optics (AO) to improve image quality.

However, obtaining a 3D image of the RI distribution in a complex medium is no easy task, particularly if the medium is so thick that it can only be accessed from one side. The difficulty comes from the fact that only sharp structures in the medium, such as point-like reflectors or interfaces, provide sufficiently high spatial frequencies to scatter light in the backward direction so that it can be detected. More slowly varying structures scatter light only in the forward direction, making them invisible to conventional epi-detection devices. Such is the case with optical coherence tomography (OCT), which can only reveal sharp sample structures, producing highly granular images. The retrieval of slowly varying structures must be inferred indirectly in this case, for example by considering their effects on the image granularity and solving an inverse problem to simultaneously reconstruct both high- and low-frequency structure [59, 60]. An alternative simpler approach, which is the subject of this roadmap, is to exploit the multiple scattering from deeper layers within the sample and use this to back-illuminate regions of interest in shallower layers. In this manner, contrast becomes based on light transmission rather than reflection, providing access to low-frequency sample structure. Such is the principle of oblique back-illumination microscopy (OBM) [61], depicted in figure 6, along with its scanning analog based on oblique back-detection (formally equivalent by virtue of Helmholtz reciprocity [62]). OBM has recently been shown to enable quantitative RI reconstruction in 3D [63].

Figure 6. Top: schematics of OBM and scanning OBM (adapted from [62]). Bottom: comparison of sub-surface OBM and en-face OCT images in mouse skin. Left: hair shafts. Middle: fat globules. Right: blood vessels (arrows indicate red blood cells). Scale bar: 10 µm.

Current and future challenges

OBM is simple to implement, basically as an add-on to any conventional microscope; however, it does suffer from drawbacks. For one, while it allows imaging within arbitrarily thick, complex samples (indeed, complexity is required to obtain backscattering in the first place), it does not provide particularly deep imaging. For example, it does not attain the same penetration depths as OCT, despite the fact that both modalities are based on scattering. One reason for this is that OCT makes use of coherent illumination which, in turn, allows the possibility of interferometric time gating (or coherence gating) to reject background and improve contrast. Another reason is that OCT is based on ballistic light illumination and detection, which is easy to control (e.g. by spatial filtering). In contrast, OBM relies on diffuse backscattering, which is much more difficult to control.

Nevertheless, diffuse backscattering is not impossible to control. In particular, a remarkable phenomenon called the memory effect [2] prescribes that diffuse light obeys ballistic transmission or reflection laws even in thick complex media, but only within a very narrow angular range dependent on basic media properties (thickness in transmission, transport scattering length in reflection; see section 13). A hint as to how the memory effect can be exploited to improve OBM comes from the field of acoustical imaging [64], as shown in figure 7. Here, diffuse back-insonification, even though it is spatially incoherent, is controlled by the memory effect to enable essentially a coherent version of OBM based on measurements of differential phase rather than differential intensity. Because the back-insonification is in the transmission direction, it can reveal weak structures within the medium that are completely invisible to standard ultrasound imaging based on pulse-echo sonography (the acoustic equivalent of OCT). In addition, what can be loosely thought of as an acoustic version of scanning OBM has also been demonstrated, though with a more involved inversion-based image reconstruction algorithm where the memory effect is more implicit [65].

Figure 7. Left: principle of ultrasound differential phase contrast (DPC). A linear-array probe transmits pairs of plane-wave pulses of different tilt angles. The memory effect leads to a controlled translational shift of the scattered back-insonification from deeper layers, allowing differential phase imaging at shallower layers. Right: a phase inclusion invisible to conventional pulse-echo sonography becomes apparent with DPC. Scale bar: 10 mm. Adapted from [64].

Advances in science and technology to meet challenges

A feature of acoustic imaging compared to optical imaging is that the wave frequencies involved are orders of magnitude smaller (typically by ∼8 orders). As such, transducers are readily available that allow the coherent reception of acoustic waves where both amplitude and phase can be directly resolved, leading to the possibility of sub-cycle time gating. The same cannot be said of optical detectors, which are currently too slow to directly resolve the optical phase. This becomes important in applications involving the memory effect, since the range of this effect is known to increase when time gating becomes more refined [66]. It is for this reason that the memory effect can be easily exploited with acoustic OBM, enabling coherent differential phase detection, while it cannot with optical OBM, which to date has only been demonstrated with incoherent differential intensity detection.

Time-resolved imaging is, of course, possible and routinely performed with light, but attaining better than picosecond time resolution requires some kind of trick typically involving interferometry. For example, ultrafast pulsed lasers are readily available, enabling sub-picosecond time gating by interference with a pulsed reference wave. This principle is exploited in time-domain OCT. Alternatively, broadband spatially coherent sources are also readily available that allow the Fourier synthesis of a time gate, as exploited in frequency-domain OCT (and also in [66]). The application of such tricks to optical OBM may be envisaged, though perhaps more conceivably in its scanning configuration which involves only single-element detectors. However, the problem of engineering appropriate reference waves still remains. Much more straightforward would be a method of time gating that does not require interferometry, perhaps making use of a Kerr gate or, better still, by direct detection with an ultrafast detector. Advances in single-photon avalanche detectors, either in single element or in array form, may lead the way here. Indeed, the development of higher-speed devices enabling the direct coherent detection of light is one of the key technological advances that the optical imaging community is eagerly awaiting.

Concluding remarks

The purpose of this section is to highlight the possibility of indirectly rather than directly imaging structures within complex media by way of scattered back-illumination, leading to the possibility of transmission-based imaging with its attendant benefits, and allowing structures to be revealed that would normally be invisible. Scattered back-illumination can be controlled to a surprising degree of precision by way of the memory effect (at least in acoustics). Remarkably, this memory effect is far more general than utilized here [67], extending beyond spatial degrees of freedom to even polarization and spectral degrees. Such a generality opens the door to far richer contrast modalities than simple phase imaging as shown here, and promises to span a wide range of applications including biomedical, imaging around corners, lidar, sonar, seismology, and many more.

Acknowledgment

National Institutes of Health: R01CA182939, R21GM134216.

6. Guidestar-assisted wavefront shaping

Joshua Brake1,5, Zhongtao Cheng2,5, Roarke Horstmeyer3, Lihong Wang2,4 and Changhuei Yang4

1 Department of Engineering, Harvey Mudd College, Claremont, CA 91711, United States of America

2 Caltech Optical Imaging Laboratory, Andrew and Peggy Cherng Department of Medical Engineering, California Institute of Technology, Pasadena, CA 91106, United States of America

3 Department of Biomedical Engineering, Duke University, Durham, NC 27708, United States of America

4 Department of Electrical Engineering, California Institute of Technology, Pasadena, CA 91106, United States of America

5 Joshua Brake and Zhongtao Cheng contributed equally to this work.

Status

Focusing light efficiently into complex scattering media is significant for many applications, including optical imaging, manipulation, therapy, and stimulation. However, scattering media randomize the wavefront of an incident optical field, preventing the light from being easily focused as it would in free space. To overcome this challenge, guidestar-assisted wavefront shaping methods are being actively developed. The general principle of guidestar-assisted wavefront shaping is illustrated in figure 8(a). The guidestar, which is typically located at the desired focus spot inside a scattering medium, interacts with the scattered photons and encodes its location in the scattered light. The measurement system detects the exiting scattered light and identifies the components that originate from the guidestar's location. The system then determines a wavefront modulation map to present on a spatial light modulator (SLM) to tailor the wavefront of the incident laser. Various information from the guidestar can be used, such as the scattered wavefront itself (figure 8(b)) [68], wavefront variation induced by the guidestar (figure 8(c)) [69], the scattering point spread function (figure 8(d)) [35], or the total signal intensity from the guidestar (figure 8(e)). According to the specific measured quantity, the wavefront modulation map is updated iteratively to maximize the desired optical pattern (e.g. a focal spot) inside the scattering media, or directly determined by phase conjugation, to refocus light to the guidestar location.

Figure 8. State-of-the-art techniques for guidestar-assisted wavefront shaping. (a) General principle of guidestar-assisted wavefront shaping. (b) Ultrasonic guidestar: (b1) wavefront recording of the frequency-shifted photons from the ultrasound modulation through a phase conjugation mirror (PCM); (b2) generating the conjugated wavefront for optical focusing. (c) Dynamic guidestar: (c1)–(c2) wavefront recording of the scattered fields at two different states of the guidestar; (c3) generating the conjugated differential field for optical focusing. (d) Fluorescent guidestar. This panel shows the iterative measurement process for focusing on features of interest using the scattering point spread function in two-photon fluorescence microscopy with F-SHARP. (e) Photoacoustic guidestar. Emitted ultrasonic waves from a feature of interest are monitored to provide feedback for the wavefront shaping system.

One example of a popular guidestar mechanism is to generate new photons with a different frequency. Examples of this class of guidestar include nanoparticles which generate a nonlinear second-harmonic signal [70], focused ultrasound which generates frequency-shifted photons using the acousto-optic effect [68, 71], and fluorescent materials [35, 72]. Among these examples, focused ultrasound provides a noninvasive and freely addressable approach for focusing light into scattering media (figure 8(b)), which is more promising for general wavefront shaping applications. A related guidestar is the photoacoustic guidestar (figure 8(e)), which is detailed in section 7. A guidestar can also encode its location information by inducing wavefront variation, which is termed a dynamic guidestar. Dynamic guidestars can be physical, such as magnetic particles [73, 74] and microbubbles [75], or virtual, such as the adapted perturbation in samples [69] or perturbations induced by an ultrasound field [76]. Through detecting and conjugating the differential field of collected scattered light in two different states of a dynamic guidestar, an optical focus can be realized at the position where the wavefront variation originates inside the scattering medium (i.e. the dynamic guidestar location) (figure 8(c)). Since fluorescence is an important contrast mechanism in optical imaging, the fluorescence-based guidestar has intrinsic advantages for adoption in fluorescence microscopy. This type of approach has been previously demonstrated for adaptive optics correction with standard one-photon fluorescence [77] as well as two-photon (2P) excitation microscopy. As 2P excitation-based detection facilitates clear image formation deeper within tissue than standard fluorescence, the intrinsic guidestar approach has been integrated into several 2P systems as a promising means to extend imaging depths to 400 µm or more within biological tissue [78].
Existing approaches include an iterative SLM update technique [40] (iterative multi-photon adaptive compensation technique), a scanning-based approach for point-spread function estimation [35] (focus scanning holographic aberration probing, figure 8(d)), and a holographic phase stepping approach for rapid correction [79] (dynamic adaptive scattering compensation holography). Such techniques provide a promising means to jointly focus and rapidly scan out images at improved tissue depths with minimal additional hardware and in a noninvasive manner.
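The differential-field conjugation used with dynamic guidestars can be illustrated numerically. The sketch below is a toy model under stated assumptions rather than a description of any cited experiment: the medium is a random complex Gaussian matrix coupling a handful of internal sites to the external modes, scattering is treated in a single-interaction approximation, playback obeys reciprocity, and the guidestar is modeled as one site whose scattering strength changes between two states. All names (`scattered_field`, `playback_intensity`, `guidestar`, and the sizes used) are hypothetical.

```python
import random


def complex_gauss(rng):
    """Draw one circular complex Gaussian sample."""
    return complex(rng.gauss(0, 1), rng.gauss(0, 1))


def scattered_field(tm, illumination, scatter_strength):
    """Field on each external mode n: sum over internal sites m of T[n][m] * s_m * a_m."""
    return [sum(t * s * a for t, s, a in zip(row, scatter_strength, illumination))
            for row in tm]


def playback_intensity(tm, wavefront):
    """Intensity at each internal site when `wavefront` is sent back through the medium."""
    n_sites = len(tm[0])
    return [abs(sum(tm[n][m] * wavefront[n] for n in range(len(tm)))) ** 2
            for m in range(n_sites)]


rng = random.Random(1)                               # fixed seed for reproducibility
n_modes, n_sites, guidestar = 200, 20, 7             # illustrative sizes only
tm = [[complex_gauss(rng) for _ in range(n_sites)] for _ in range(n_modes)]
illumination = [complex_gauss(rng) for _ in range(n_sites)]

state_1 = [1.0] * n_sites                            # guidestar in its first state
state_2 = list(state_1)
state_2[guidestar] = 1.5                             # only the guidestar site changes

field_1 = scattered_field(tm, illumination, state_1)
field_2 = scattered_field(tm, illumination, state_2)
differential = [e2 - e1 for e1, e2 in zip(field_1, field_2)]

# Subtracting the two recordings removes the static background, so the
# conjugated differential field refocuses at the guidestar site.
intensity = playback_intensity(tm, [d.conjugate() for d in differential])
brightest_site = max(range(n_sites), key=lambda m: intensity[m])
print(brightest_site, guidestar)
```

In this toy model the peak-to-background ratio grows with the number of controlled modes, which is why additional degrees of freedom are desirable even though they increase the amount of data to be recorded and processed.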

Current and future challenges

The major areas of development that can improve guidestar-assisted wavefront shaping fall into two main categories: (a) improvement of system latency to enable focusing deeper in dynamic scattering media and (b) development of new guidestar mechanisms to improve focusing performance and enable increased adoption. Solving these challenges will enable wavefront shaping to advance beyond experiments with carefully controlled and designed samples to more practical applications throughout biomedicine.

The latency between recording and playback in a wavefront shaping system is one of the most critical specifications for practical biomedical applications. The wavefront that focuses light to a desired location is valid only for a limited time due to biological motion such as blood flow. This timescale can be as long as several seconds in acute brain slices in vitro [80], but the decorrelation time at the same depth within in vivo specimens drops by three orders of magnitude to around 1 ms. Such fast decorrelation requires that the latency of a wavefront shaping system be on a timescale of milliseconds or less, which is challenging for most current techniques. In addition, for effective wavefront shaping into thicker samples, the control of additional degrees of freedom (i.e. additional pixels in the wavefront shaping device) is desirable, which places additional demands on system latency that must be considered.
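The constraint that the decorrelation time places on the number of controllable modes can be estimated with simple arithmetic. The following is a rough sketch, assuming that each input mode requires a fixed number of displayed patterns and that the modulator update rate is the only bottleneck; the update rates and the three patterns per mode are illustrative order-of-magnitude assumptions and are not values taken from the text, and `modes_within_decorrelation` is a hypothetical helper.

```python
def modes_within_decorrelation(rate_hz, decorrelation_us, patterns_per_mode):
    """Input modes that can be probed before the medium decorrelates (integer arithmetic)."""
    patterns_available = rate_hz * decorrelation_us // 1_000_000
    return patterns_available // patterns_per_mode


# Assumed, illustrative modulator update rates in Hz.
assumed_rates_hz = {
    "liquid crystal SLM": 60,
    "digital micromirror device": 20_000,
    "1D acousto-optic or MEMS modulator": 300_000,
}

decorrelation_us = 1_000          # about 1 ms, the in vivo figure quoted above
for name, rate in assumed_rates_hz.items():
    n_modes = modes_within_decorrelation(rate, decorrelation_us, patterns_per_mode=3)
    print(f"{name}: about {n_modes} modes per decorrelation time")
```

Under these assumptions only the fastest modulators can address on the order of a hundred modes within one decorrelation time, before camera readout, data transfer, and computation are even taken into account.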

The second main challenge is related to the guidestar mechanisms themselves. While many different guidestar mechanisms (such as those discussed previously) have been developed, they each have specific applications for which they are best suited. For example, one of the main advantages of the ultrasonic guidestar is that it is freely addressable and can be easily moved to target a desired focal location. However, it has a low modulation efficiency (defined as the percentage of light interacting with the guidestar location and subsequently 'tagged' with a different frequency), it is difficult to use in a reflection geometry due to its non-isotropic tagging behavior, and it is impacted by acoustic absorption, especially at higher ultrasound frequencies which are optimal for achieving high-resolution foci. As this one example indicates, further development of additional guidestar techniques or improvements to existing guidestars is necessary to achieve the goals of a freely addressable, high-resolution, efficient guidestar which is compatible with a reflection geometry and can access depths up to the optical absorption limit.

Advances in science and technology to meet challenges

Addressing the challenges of lower latency wavefront shaping systems and better guidestars requires a combination of technological development and the invention of new ways to leverage intrinsic or extrinsic signals within a sample for focusing.

Improving the latency of a wavefront shaping system can be accomplished by developing faster techniques and technologies for the various stages of the recording and playback process. The primary areas for improvement are (a) SLM response, (b) data transfer, (c) phase map calculation, and (d) guidestar integration.

The most widely used SLMs are based on liquid crystal (LC) technology, which can be configured to control either the amplitude or the phase of the wavefront. Digital micromirror devices are another popular technology that is several orders of magnitude faster than LC-based SLMs, but in their simplest configuration they offer only binary amplitude control of a wavefront. While other novel SLM technologies based on acousto-optics or liquid light valves exist and offer even faster modulation, these methods are normally limited in the number of modes they can control, which restricts their practical capabilities. State-of-the-art wavefront shaping systems leverage fast SLMs (e.g. digital micromirror devices) and optimized electronics (e.g. field-programmable gate arrays, FPGAs) to maximize the system throughput and minimize the latency between recording and playback.

When controlling many modes simultaneously, the data transfer requirements also quickly become non-trivial. A typical scientific CMOS camera contains 5 × 10⁶ 16-bit pixels, meaning that a single raw frame is on the order of 10 MB. Even using the fastest commercially available data links, maximum frame rates typically top out at 100 frames per second. The amount of data also impacts the time required to calculate the appropriate phase mask for playback. Using a phase shifting approach requires a few addition operations and a division per pixel, whereas more complicated measurement schemes such as those based on off-axis interferometers may require more involved computations such as Fourier transforms. Fortunately, many of these calculations can be highly optimized and parallelized using specialized hardware such as graphics processing units or custom digital hardware on FPGAs. Besides advances in hardware, computational methods are also emerging for facilitating wavefront shaping via reduced data acquisition, such as single-shot ultrasound-assisted optical focusing [81].
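The frame-size arithmetic and the per-pixel cost of a phase shifting measurement can be illustrated with a minimal Python sketch. It assumes the common four-step scheme, in which four frames are recorded with reference phase shifts of 0, π/2, π and 3π/2; the text above does not specify a particular scheme. It also assumes that playback uses the phase-conjugate (negated) map. All function names and the simulated test values are hypothetical.

```python
import math


def frame_size_bytes(n_pixels, bits_per_pixel):
    """Raw size of a single camera frame in bytes."""
    return n_pixels * bits_per_pixel // 8


def four_step_phase(i_0, i_90, i_180, i_270):
    """Per-pixel phase from four phase-shifted frames.

    Assumes each frame follows I_k = A + B*cos(phi + shift_k) with shifts of
    0, pi/2, pi and 3*pi/2. Two subtractions and one arctangent of a ratio
    are needed per pixel.
    """
    return [math.atan2(d - b, a - c)
            for a, b, c, d in zip(i_0, i_90, i_180, i_270)]


def simulate_frames(phases, offset=2.0, modulation=1.0):
    """Hypothetical noiseless interferograms, used only to exercise the sketch."""
    shifts = (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)
    return [[offset + modulation * math.cos(p + s) for p in phases]
            for s in shifts]


# Data volume for the camera described in the text: 5e6 pixels at 16 bits each.
size = frame_size_bytes(5_000_000, 16)
print(f"frame size: {size / 1e6:.0f} MB, "
      f"rate at 100 frames/s: {size * 100 / 1e9:.0f} GB/s")

# Recover a few test phases, then negate them for phase-conjugate playback
# (an assumption of this sketch).
true_phases = [-2.0, -0.5, 0.0, 0.7, 3.0]
recovered = four_step_phase(*simulate_frames(true_phases))
playback = [-p for p in recovered]
```

Because every pixel is processed independently, this kind of calculation maps naturally onto the parallel hardware mentioned above.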

A promising approach toward improving the system latency is an integrated sensor and wavefront shaping architecture that combines the functionality of an image sensor and an SLM into a single device with some basic computation capabilities provided in each pixel [82]. In this device, each pixel can capture and compute its playback phase in parallel, thus eliminating the need to transfer data. Using a micromirror-based architecture for the wavefront-shaping elements will provide low-latency shaping capabilities. Furthermore, this architecture can solve the alignment challenges of time-reversal-based wavefront shaping systems by co-locating the sensing and shaping elements.

To deal with the low signal-to-noise ratio of the ultrasonic guidestar, multiple iterative measurements of the phase map can be made to improve the focus peak-to-background ratio. However, each of these iterations requires a recording and playback cycle and thus can significantly increase the effective wavefront shaping system latency. Other guidestars, such as magnetic particles that can provide higher wavefront modulation efficiency, are better in this respect since they can provide higher signal-to-noise ratios at the same focusing depth. In summary, each guidestar technology comes with its own tradeoffs and benefits, and balancing these tradeoffs for a particular application is critical.

Concluding remarks

Guidestar-assisted wavefront shaping is an attractive technique for deep imaging and efficient delivery of light energy beyond the diffusion limit. Although the system latency and guidestar mechanism of current wavefront shaping technologies are still the main limiting factors for more practical biomedical applications, advances in science and technology are showing great potential to gradually resolve or alleviate these limitations. The rapid evolution of semiconductor and LC technologies for optical sensors and modulators will enable high-speed and high-resolution wavefront sensing and control in the future. Meanwhile, advanced computational methods are being actively developed for more efficient information extraction to accelerate wavefront shaping. The continued development of these techniques offers the potential for guidestar-assisted wavefront shaping to expand the optical imaging capabilities of scientists both in the laboratory and clinic.

7. Imaging at depth with linear feedback

Hilton B de Aguiar and Sylvain Gigan

Laboratoire Kastler Brossel, ENS-Université PSL, CNRS, Sorbonne Université, Collège de France, 24 rue Lhomond, 75005 Paris, France

Status

High-resolution noninvasive imaging at depth is currently performed mostly by wavefront shaping to compensate for scattering, be it by optimization, phase conjugation, or transmission-matrix approaches [83] (see also sections 8–10). To be noninvasive, these methods require a 'guidestar' mechanism. Apart from acoustic-based methods, all require a nonlinear feedback mechanism, for instance a second-harmonic signal or multiphoton fluorescence, in order to converge to a single-grain focus, typically diffraction-limited [84] (see also section 5). Even acoustic methods benefit from nonlinearities, which allow the resolution to be improved to close to the optical diffraction limit. Linear feedback is conventionally considered unfeasible because it is impossible to converge to a focus from an extended object. In parallel, algorithmic tools have been introduced and have become very popular for retrieving hidden fluorescent objects thanks to the memory effect. Nevertheless, they remain limited in the size and complexity of the object that can be recovered. However, linear contrasts, such as fluorescence and Raman, are extremely important in biomedical imaging: they are the easiest and cheapest to implement, are extremely widespread in life sciences and medicine, and, particularly for fluorescence, provide an unprecedented level of signal (compared to their nonlinear counterparts). Reaching deep imaging using such linear incoherent feedback is therefore an important goal for the field.

In this section, we cover a few computational strategies that allow objects to be retrieved using linear incoherent contrast, such as fluorescence and spontaneous Raman scattering.

Current and future challenges

Deep imaging has mostly been done by exploiting nonlinear feedback in wavefront shaping experiments. Soon after the seminal work from Mosk's group, it was realized that obtaining a feedback signal for single-grain focusing within the scattering medium was far from simple. Hence, the field has focused heavily on nonlinear signals as a feedback mechanism because of their ability to converge to a single focus in wavefront shaping experiments, as seen for instance very recently [85]. However, nonlinear processes are less popular in science and engineering due to the cost associated with the hardware (mostly the laser source).

With the emergence of the Big Data era, computational microscopy has brought new directions to the wavefront shaping toolbox. In particular, computational tools exploit other properties of scattering, which may allow for unique single-grain focusing in wavefront shaping experiments or retrieve an image without resorting to wavefront shaping, using linear optical processes as readout, in particular fluorescence. A first set of computational tools based on variance methods has recently been put forward for linear fluorescence and Raman signals [86–88]: variance calculations can be seen as a 'nonlinear metric,' therefore allowing for focusing convergence in wavefront shaping experiments. However, imaging is then only possible with focus-scanning techniques, and hence is time consuming. More recently, [89] has solved this issue by combining wavefront shaping with computational modeling and algorithmic tools, allowing for imaging without focus scanning. Indeed, the most recent results have shown that costly wavefront shapers are actually not needed: reading out linear fluorescence excited by a set of speckle patterns, combined with computational microscopy tools, allows for image recovery at depth [90]. Along the same lines, one may achieve super-resolution capabilities within scattering media by exploiting computational tools without resorting to wavefront shaping hardware [91]. These examples highlight the wealth of information that the incoherent properties of linear fluorescence offer for deep imaging.
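Why a variance can act as a 'nonlinear metric' may be seen in a toy model, sketched here in Python. It assumes that each emitter produces its own independent, fully developed speckle pattern on a camera, and that the detected image is the incoherent sum of these patterns weighted by the excitation each emitter receives. This is a simplified illustration under those assumptions, not the specific algorithms of [86–88]; all names and parameter values are hypothetical.

```python
import random


def fluorescence_image(weights, emitter_speckles):
    """Incoherent sum of per-emitter speckles, scaled by excitation weights."""
    n_pixels = len(emitter_speckles[0])
    return [sum(w * s[p] for w, s in zip(weights, emitter_speckles))
            for p in range(n_pixels)]


def total_signal(image):
    """Linear metric: the integrated fluorescence."""
    return sum(image)


def contrast(image):
    """Variance-based metric: variance normalised by the squared mean."""
    mean = sum(image) / len(image)
    variance = sum((v - mean) ** 2 for v in image) / len(image)
    return variance / mean ** 2


random.seed(0)
N_EMITTERS, N_PIXELS = 10, 2000

# Exponential intensity statistics stand in for fully developed speckle.
speckles = [[random.expovariate(1.0) for _ in range(N_PIXELS)]
            for _ in range(N_EMITTERS)]

# Same total excitation, either spread over all emitters or focused on one.
spread = [1.0 / N_EMITTERS] * N_EMITTERS
focused = [1.0] + [0.0] * (N_EMITTERS - 1)

image_spread = fluorescence_image(spread, speckles)
image_focused = fluorescence_image(focused, speckles)

print("total signal:", total_signal(image_spread), total_signal(image_focused))
print("contrast:    ", contrast(image_spread), contrast(image_focused))
```

In this model the integrated signal is nearly the same in both cases, so it cannot tell a focus from a diffuse excitation, whereas the contrast is markedly higher when a single emitter dominates.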

While conventional wisdom suggests that forming an actual image is necessary, the randomness embedded in the speckle allows for imageless retrieval of precious information at depth. For instance, in [92] demixing algorithms were used to retrieve the temporal activity of neurons (fluctuations of fluorescence), without the need to modulate the illumination (figure 9).

Figure 9. An example of fluorescence demixing in scattering media, here for functional activity. A DMD is used to rapidly excite fluorescent beads to mimic neurons firing. The resulting low contrast fluorescent patterns are collected through a skull bone (highly scattering) and can be demixed thanks to a non-negative matrix factorization algorithm; hence the activity (but not the location of the neuron) can be retrieved (adapted from [92]).
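The demixing idea can be illustrated with a minimal non-negative matrix factorization in Python. The sketch uses the standard multiplicative update rules for the squared-error cost, which may differ from the solver used in [92], and applies them to a synthetic recording built from two hypothetical sources. The helper names, matrix sizes and firing patterns are all assumptions made for illustration.

```python
import random


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b]
            for row in a]


def transpose(a):
    return [list(row) for row in zip(*a)]


def frob_error(v, w, h):
    """Squared Frobenius norm of the residual v - w*h."""
    wh = matmul(w, h)
    return sum((x - y) ** 2
               for row_v, row_wh in zip(v, wh)
               for x, y in zip(row_v, row_wh))


def nmf(v, rank, n_iter=300, eps=1e-12, seed=0):
    """Factor v (pixels x frames) into w (pixels x rank) and h (rank x frames)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(n_iter):
        # Update the temporal traces h with the footprints w held fixed.
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)]
             for i in range(rank)]
        # Update the footprints w with the traces h held fixed.
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(n)]
    return w, h


# Synthetic recording: two sources with random scattered footprints and
# distinct firing patterns on top of a weak baseline.
rng = random.Random(1)
N_PIXELS, N_FRAMES = 30, 40
footprints = [[rng.random() for _ in range(2)] for _ in range(N_PIXELS)]
activity = [[1.0 if t % 5 == 0 else 0.1 for t in range(N_FRAMES)],
            [1.0 if t % 7 == 3 else 0.1 for t in range(N_FRAMES)]]
video = matmul(footprints, activity)

w_first, h_first = nmf(video, rank=2, n_iter=1)
w_fit, h_fit = nmf(video, rank=2, n_iter=300)
print("residual after 1 iteration:   ", frob_error(video, w_first, h_first))
print("residual after 300 iterations:", frob_error(video, w_fit, h_fit))
```

The rows of `h_fit` play the role of the temporal activity traces. As in the caption, the factorization yields activity and scattered footprints rather than the positions of the sources, and the recovered traces are defined only up to ordering and scale.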

Advances in science and technology to meet challenges

These recent outcomes exploiting computational tools highlight a fertile field ahead. Several of these successful demonstrations rely on the fact that fluorescence is an incoherent effect and often sparse. While these aspects are embedded in many off-the-shelf algorithms, going beyond the assumptions of incoherence and object sparsity in computational tools is certainly a new challenge to tackle.

As we have learned [83], correlations in multiple scattering aid convergence of the image retrieval process. Up to now, mostly spatial correlations have been used (i.e. the spatial memory effect). Other correlation properties are known, such as spectral (see below) and polarization [93], and could also be exploited in future computational tools.

Although fluorescence is the major incoherent contrast mechanism in wavefront shaping methods, there is still much to be done with Raman scattering, another popular contrast method with molecular selectivity. Unlike fluorescence, the main challenge in Raman scattering is its weak signal, which degrades the performance of any method. Nevertheless, there is richness in the Raman spectrum that is still to be unveiled, as only very recently has the spectral information embedded in the Raman signal been exploited. To date, only one report has shown noninvasive focusing using highly sparse samples (therefore focusing convergence is guaranteed) [94], highlighting the need to develop tools for deep Raman imaging, although recent work has shown that variance-based computational methods allow for 'chemical' focusing using wavefront shaping [88]. Finally, speckles have been used to enable super-resolution in Raman-based processes [95], opening another research avenue for exploiting computational tools in deep imaging.

Concluding remarks

To conclude, linear feedback mechanisms were originally seen as a no-go for single-grain focusing in wavefront shaping experiments. Nevertheless, they have recently been re-analyzed, with advances in computational tools showing that there is still a lot to be exploited from these well-established and popular microscopy contrasts, therefore opening important perspectives for deep imaging. Beyond the incoherent contrasts discussed here, coherent mechanisms (in particular Raman) would also open important new applications. The computational tools developed so far have been shown to be very useful, but modern machine learning tools, in particular physics-informed neural networks (see sections 11 and 16), may also prove extremely powerful and versatile.

Acknowledgments

This research has been funded by the FET-Open (Dynamic-863203) and European Research Council ERC Consolidator (SMARTIES-724473) grants.

8. Photoacoustic-guided optical wavefront shaping

Emmanuel Bossy1 and Thomas Chaigne2

1 University Grenoble Alpes, CNRS, LIPhy, 38000 Grenoble, France

2 Aix Marseille University, CNRS, Centrale Marseille, Institut Fresnel, F-13013 Marseille, France

Status

In an ongoing effort to develop optical imaging techniques that can reach large depths into scattering biological tissue, photoacoustic imaging stands out due to its unique capabilities. Relying on the emission of an ultrasonic wave upon the absorption of a pulsed illumination, this modality can be used in a variety of optical excitation and acoustic detection schemes. Depending on the acoustic frequency content of the detected signal, the geometry of the detector and the optical illumination system, various regimes can be explored, with a typical depth to resolution ratio of about 200.

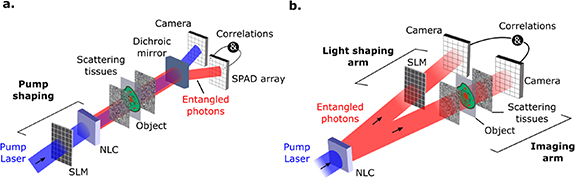

The imaging resolution depends on the depth and scattering regime, and one can distinguish two main categories: below a few scattering mean free paths, it is possible to focus the optical illumination beam, and thus to achieve optical resolution. Beyond this limit, the illumination light is multiply scattered and diffuse, and, without scattering compensation, the resolution is set by the acoustic detection bandwidth, higher frequencies providing a better resolution. The depth–resolution tradeoff is then ultimately set by the increasing attenuation of ultrasound with frequency (typically ∼1 dB cm⁻¹ MHz⁻¹ for biological tissue) and the maximum optical energy that biological tissue can receive without damage. Typically, photoacoustic imaging of biological tissue can provide maps of light absorption with a resolution of about 100 µm at a depth of around 2 cm.
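As a rough illustration of this tradeoff, the following Python sketch evaluates the one-way acoustic attenuation implied by the typical coefficient of about 1 dB per cm per MHz quoted above. The function names, the assumed sound speed of 1500 m/s and the use of the acoustic wavelength as a crude proxy for resolution are illustrative assumptions rather than values taken from this roadmap.

```python
SOUND_SPEED_M_PER_S = 1500.0  # assumed typical value for soft tissue


def attenuation_db(freq_mhz, depth_cm, alpha_db_per_cm_mhz=1.0):
    """One-way attenuation in dB, assuming a linear frequency dependence."""
    return alpha_db_per_cm_mhz * freq_mhz * depth_cm


def wavelength_um(freq_mhz, sound_speed=SOUND_SPEED_M_PER_S):
    """Acoustic wavelength in micrometres, a crude proxy for resolution."""
    return sound_speed / (freq_mhz * 1e6) * 1e6


# Attenuation over a 2 cm path for a few illustrative frequencies.
for freq in (5.0, 15.0, 50.0):
    print(f"{freq:5.1f} MHz: wavelength {wavelength_um(freq):6.1f} um, "
          f"attenuation over 2 cm {attenuation_db(freq, 2.0):5.1f} dB")
```

Under these assumptions, a wavelength of 100 µm corresponds to 15 MHz and a one-way loss of 30 dB over 2 cm, which gives a sense of why higher-frequency, higher-resolution detection becomes impractical at larger depths.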