Abstract

Learning from successful applications of methods originating in statistical mechanics, complex systems science, or information theory in one scientific field (e.g., atmospheric physics or climatology) can provide important insights or conceptual ideas for other areas (e.g., space sciences) or even stimulate new research questions and approaches. For instance, quantification and attribution of dynamical complexity in output time series of nonlinear dynamical systems is a key challenge across scientific disciplines. Especially in the field of space physics, an early and accurate detection of characteristic dissimilarity between normal and abnormal states (e.g., pre-storm activity vs. magnetic storms) has the potential to vastly improve space weather diagnosis and, consequently, the mitigation of space weather hazards.

This review provides a systematic overview on existing nonlinear dynamical systems-based methodologies along with key results of their previous applications in a space physics context, which particularly illustrates how complementary modern complex systems approaches have recently shaped our understanding of nonlinear magnetospheric variability. The rising number of corresponding studies demonstrates that the multiplicity of nonlinear time series analysis methods developed during the last decades offers great potentials for uncovering relevant yet complex processes interlinking different geospace subsystems, variables and spatiotemporal scales.

Similar content being viewed by others

1 Introduction

Solar coronal mass ejections (CMEs) colliding at high speeds of up to 3000 km/s with the Earth’s magnetosphere can cause extremely intense magnetic storms and sudden magnetic field changes (and electric field pulses) both in the magnetosphere and at the Earth’s surface. Tsurutani and Lakhina (2014) found that a “perfect” interplanetary CME (ICME) could create a magnetic storm with intensity up to the saturation limit, with the disturbance storm-time index \((\mathrm{Dst}) \sim -2500\text{ nT}\), a value greater than the one estimated for the famous Carrington storm. The interplanetary shock would arrive at Earth within ∼12 h with a magnetosonic Mach number ∼45, comparable to astrophysical shocks. Moreover, the associated magnetospheric electric field would form a new relativistic electron radiation belt. Eventually, the dayside and nightside shock-related auroral events might cause geomagnetically induced currents (GICs) at the surface of the Earth, which in turn could lead to the collapse of power systems over large geographical areas. Recently, Welling et al. (2021) used the estimates provided by Tsurutani and Lakhina (2014) to drive a coupled magnetohydrodynamic-ring current-ionosphere model of geospace to obtain more physically accurate estimates of the geospace response to such an event. The new result regarding GIC levels exceeds values observed during historic extreme events, including the March 1989 event that brought down the Hydro-Québec power grid in eastern Canada. Such an extreme space weather scenario illustrates, on the one hand, the need for reliable operational space weather prediction schemes but also, on the other hand, the necessity to understand the underlying physical mechanisms that correspond to an open spatially extended nonequilibrium (input-output) dynamical system, as is the Earth’s magnetosphere (Consolini et al. 2008; Balasis et al. 2009).

The solar wind-magnetosphere system has been shown to be nonlinear (e.g., Tsurutani et al. 1990; Johnson and Wing 2005; Reeves et al. 2011; Wing et al. 2016 and references therein). Indeed, since the early 1990s it was shown how the dynamics of the Earth’s magnetosphere-ionosphere system in response to the changes of the interplanetary medium and to the solar wind displays a nonlinear, chaotic and near-criticality (avalanche) dynamics especially during magnetospheric substorms (e.g., Chang 1992, 1998, 1999; Klimas et al. 1996; Consolini et al. 1996; Consolini 1997; Uritsky and Pudovkin 1998; Lui et al. 2000; Uritsky et al. 2002). In particular, in Consolini et al. (1996) evidenced that the character of the magnetospheric dynamics as monitored by the auroral electrojet (AE) index cannot be simply assumed to be low-dimensional, showing, indeed, multifractal features similar to intermittent turbulence. Later, analyses on the busty-dynamics and on the self-similarity features of geomagnetic indices and auroral images (Consolini 1997; Uritsky and Pudovkin 1998; Chapman et al. 1998; Lui et al. 2000; Klimas et al. 2000; Uritsky et al. 2002) suggested that the magnetospheric dynamics display features similar to those of a non-equilibrium system near a critical point of the dynamics (see also, Chang 1999; Consolini 2002). In this framework, the magnetospheric substorms, which are among the main manifestations of the solar wind-magnetosphere-ionosphere coupling, were associated with the occurrence of non-equilibrium dynamical phase transitions, mainly involving the plasma confined in the central plasma sheet (CPS) region of the near-Earth magnetotail (Sitnov et al. 2000, 2001; Sharma et al. 2001) – a region which is characterized by turbulence and a complex dynamics (Borovsky et al. 1997; Borovsky and Funsten 2003). Nowadays, the dynamics of the Earth’s magnetosphere is regarded as an open spatially extended non-equilibrium system displaying dynamical complexity and self-organization (Klimas et al. 2000; Consolini et al. 2008; Borovsky and Valdivia 2018). The term dynamical complexity refers to a specific quantifiable physical property and has not to be read as a jargon to refer to fascinating complicated phenomena. According to Chang et al. (2006) dynamical complexity is a phenomenon deeply rooted in the nature that emerges in nonlinear interacting systems, when a multitude of structures/subsystems at different spatio-temporal scales are formed and interact generating a pseudo-stochastic dynamics. Furthermore, a peculiar feature of dynamical complexity is that the evolution laws of the global system cannot be directly surmised from the elemental laws that regulate the dynamics of the single parts. In this framework, the Earth’s magnetosphere-ionosphere system, which consists of several nonlinearly interacting plasma regions and, inside of each of these regions, of different physical structures at different spatio-temporal scales, can display dynamical complexity in response to solar wind and interplanetary medium changes.

Dynamical complexity detection for output time series of complex systems is one of the foremost problems in many fields of science. Especially in geomagnetism and magnetospheric physics, accurate detection of the dissimilarity between normal and abnormal states (e.g., pre-storm activity and magnetic storms) has the potential to vastly improve space weather diagnosis and, consequently, support the mitigation of space weather hazards. Several studies have reported advances in this context by applying complex systems tools like scaling exponents, entropies, functional network analysis, or recurrence analysis to different types of observational data including geomagnetic indices, space and ground magnetometers (Balasis et al. 2006, 2008, 2009, 2011a, 2013, 2018, 2020; Consolini et al. 2021; De Michelis and Consolini 2015; De Michelis et al. 2016, 2017a, 2020, 2021; Dods et al. 2015, 2017; Donner and Balasis 2013; Donner et al. 2018, 2019; Papadimitriou et al. 2020; Tindale and Chapman 2016; Wing et al. 2022).

Herein we present specific aspects of geomagnetic variability (in both time and space) that have already been successfully addressed with complex systems methods. By utilizing a variety of complementary modern complex systems-based approaches, an entirely novel view on nonlinear magnetospheric variability is obtained. For example, nonlinear measures based on the analysis of recurrences of previous states (Marwan et al. 2007; Donner et al. 2011a, 2011b) have been successfully applied to studying low-latitude geomagnetic indices Dst and SYM-H along with characteristic variables of the solar wind (Donner et al. 2018, 2019; Alberti et al. 2020b). Specifically, the time-dependent coupling between the solar wind and the magnetosphere along with the relationship between magnetic storms and magnetospheric substorms is of paramount importance for space weather processes. However, the storm/substorm relationship is one of the most controversial aspects of magnetospheric dynamics (Gonzalez et al. 1994; Kamide et al. 1998; Sharma et al. 2003; Daglis et al. 2003). In order to further disentangle this relationship and the role of relevant solar wind variables as drivers and mediators, multivariate causality measures employing the concept of graphical models constitute one particularly promising tool (Runge et al. 2015, 2019a). Toward this goal, in a recent article Runge et al. (2018) highlighted the significant potential of combining a causal discovery algorithm with a conditional independence test based on conditional mutual information for tackling contemporary research questions in magnetospheric physics, such as the storm-substorm relationship. Accounting for possible interactions between processes at different temporal scales, the aforementioned approaches can be combined with a recently developed framework for studying cross-scale interdependencies in complex dynamics (Paluš 2014).

Taken together, the multiplicity of recently developed approaches in the field of nonlinear time series analysis offers great potential for uncovering relevant yet complex processes interlinking different geospace subsystems, variables and spatio-temporal scales. Nowadays, information science and dynamical systems theory play a fundamental role in understanding and predicting the behaviour of the coupled solar-terrestrial system (Baker 2020). Therefore, this review provides a first-time systematic overview of relevant complex systems-based techniques and their applicability in the context of geomagnetic variability. Section 2 presents some particularly valuable concepts of dynamical systems theory, while Sects. 3 and 4 concentrate on substorm and storm research, respectively. Section 5 deals with the solar wind driving of radiation belt dynamics, while Sect. 6 provides a perspective on how systems science methodology can be further used to advance physical understanding of the near-Earth electromagnetic environment. The review concludes with an outlook.

2 Dynamical Systems Theory: Core Concepts and Their Practical Application

2.1 Dynamical Systems Theory, Statistical Physics and Chaos

Dynamical Systems Theory originated in Newtonian mechanics and has emerged as a universal approach to investigate the time evolution of a vast range of systems in physics, chemistry, biology, engineering and economy (Strogatz 2018; Contopoulos 2002), to name just a few. In general, a set of variables, each denoting a particular property, characterizes the instantaneous behaviour of the system. For example, a simple pendulum can be fully described by two parameters, its angular position and angular velocity. This set of variables represents the state of the system and the total number of such variables is called the number of degrees of freedom. All possible states of a system construct the state space, the dimension of which is equal to the number of degrees of freedom. In fact, at every time step, each state is represented by a point in the state space and its temporal evolution can be viewed as a sequence of states in this space, called the system trajectory. In many cases, the trajectory brings the system into a particular state or a set of states (a region in the state space) in long time, known as the attractor of the system. An attractor can be a fixed point, in which the system reaches an equilibrium state, or a limit cycle indicating a final stable oscillatory situation. However, sometimes the system can also end in a non-oscillatory motion within a complex-shaped set in the state space known as a strange attractor. Such systems are locally unstable yet globally stable.

The most common way to describe how the system state evolves is a set of continuous or discrete differential equations (also distinguished by the nomenclature of differential and difference equations, respectively), based on the governing laws among system variables. This also requires knowing the initial conditions (state) of the system. In principle, given the initial conditions (present state), one can predict the long-time behaviour (future states) of the system, deterministically. However, this may only be feasible for low-dimensional cases, i.e., systems with small numbers of degrees of freedom. In fact, in high dimensional systems with large numbers of degrees of freedom, like almost all real-world systems, one cannot solve such high dimensional equations of motions in practice.

One of the most successful theoretical frameworks of physics that deals with such high dimensional cases is statistical mechanics. Indeed, due to the incomplete knowledge of the microscopic world there should be some uncertainty in the system behaviour, thus bringing probability theory and statistics as well as entropy as a generic concept to quantify this uncertainty to the front. As a consequence, one can describe the (average) macroscopic behaviour of the system in terms of microscopic properties. In this approach, instead of working with a single state, one deals with a large number of copies of the system in its different possible states, referred to as a statistical ensemble of the system. This probabilistic point of view has helped us to investigate and understand a multitude of phenomena in nature, from the behaviour of elementary particles (Kardar 2007) to Earth and space sciences (Consolini et al. 2008; Balasis et al. 2013; Livadiotis 2018) and beyond.

Note that many concepts introduced in statistical physics are only applicable in equilibrium situations, in which the statistical ensembles are time independent. However, almost all real-world systems are changing over time, and exchanging mass and energy with their environment, i.e., they are open systems. Although non-equilibrium statistical physics can deal with many non-equilibrium systems, still many natural systems are far beyond the domain of non-equilibrium statistical physics. The seminal works of Einstein (Einstein 1905) and Langevin (Langevin 1908) on Brownian Motion introduced another probabilistic approach to overcome the high dimensionality of the system, especially in non-equilibrium situations. In this methodology, one focuses only on a few relevant and useful variables, and considers the effect of all other unknown dynamical variables as a noise, i.e. a stochastic term, and implements this into differential equations among those small number of variables. These types of equations are called stochastic differential equations and have found their applications in many research fields including physics, engineering, economics and finance, biology and medicine (Mao 2007). Such a stochastic point of view implies that any prediction about the system’s future evolution is also probabilistic.

The main problem here is that we usually do not know the noise term and hence need to make some assumptions about the nature of the noise. The validity of our assumptions usually can be verified by the comparison between our predictions and the experimental observations. Another possible source of stochasticity is the noise that arises from inaccurate measurements. In such cases, we can also implement the role of such observational noises into the corresponding dynamical equations, as mentioned above. Note that, instead of solving the stochastic dynamical equations between different system parameters, one can study the evolution of the probability density function of those parameters, by solving a deterministic differential equation, called Fokker-Planck equation (Kadanoff 2000) or, more generally, the Chapman-Kolmogorov equation (Kolmogorov 1938). This means that, the stochasticity aspect of the dynamics is inherited in the probability density that can give us remarkable information about the system dynamics, though in most nonlinear problems solving the Chapman-Kolmogorov or Fokker-Planck equation is almost impossible and one can only approximate the probability density, at best. This kind of stochastic approach has already found application in a broad range of research areas, such as climate modeling (Hasselmann 1976) and space physics (Subbotin et al. 2010; Noble and Wheatland 2012).

On the other hand, the lack of precise knowledge about the initial conditions can be another source of (apparent) stochasticity in the system’s behaviour, and thus be the source of unpredictability even in low-dimensional deterministic systems. This arises from the high sensitivity of the system dynamics to its initial conditions, in which a small uncertainty in the initial states, such as those due to errors in measurements or rounding errors in numerical calculations, often grow exponentially with time. This divergence of initially close trajectories in long time can be characterized by a quantity called Lyapunov exponent that measures the rate of this separation. This divergence means that the long-time behaviour of the system is unpredictable in general without the presence of any stochastic elements in its dynamics. Such behaviour is characteristic of nonlinear dynamical systems and classified as chaotic behaviour in which the system eventually reaches a strange attractor. Many physical, social, biological and economic systems are examples of such chaotic behaviour (Tsonis 1992), with many applications in climate and space sciences (Chen and Palmadesso 1986; Crosson and Binder 2009). Although many systems have been identified as examples of low-dimensional deterministic chaos, using various methods which estimate chaos-based parameters like dimension, number of degrees of freedom and Lyapunov exponents from experimental time series (Nicolis and Nicolis 1984; Fraedrich 1986; Tsonis and Elsner 1988), however, many of these findings were challenged. For instance, it has been shown in atmospheric dynamics that the reliability of such chaos-identification algorithms is limited and the observed low-dimensional weather or climate attractors have been considered as spurious results (Grassberger 1986; Lorenz 1991; Paluš and Novotná 1994). It asserts that the application of chaos-based measures like fractal dimensions or Lyapunov exponent can be unreliable in high dimensional or stochastic processes. After the critical re-evaluation of concepts based on purely deterministic systems, various types of information-theoretic measures have been proposed.

In the following, we will detail some fundamental concepts of dynamical systems theory and nonlinear time series analysis that have recently proven useful for gaining deeper insights into the nonlinear dynamical characteristics of the near-Earth electromagnetic environment.

2.2 Stochastic Time Series Properties: Long-Range Dependence, Fractals and Multi-Fractals

Long-range dependence is a wide-spread feature of real-world dynamical systems. By definition, it is associated with a linear time series analysis concept, the characteristic behaviour of a signal’s autocorrelation function (exhibiting power-law decay for many real-world geophysical systems) or, equivalently, the associated power spectral density (also displaying a characteristic power-law scaling), both of which are intimately related via a simple Fourier transform. While the scaling behaviour of the autocorrelation function is generally hard to identify for time series of limited length, the corresponding behaviour of the spectral density provides an easy and robust means to estimating a characteristic quantity, the power spectral exponent \(\beta \). This exponent is intimately related to another classical measure of long-range dependence, the celebrated Hurst exponent \(H\) originally introduced by the British hydrologist E.E. Hurst in the 1950s, via \(\beta = 2H+ 1\). In the context of river discharges, it has been argued that long-range dependence is naturally generated when superimposing various “microscopic” (stochastic) processes with heterogeneous parameters, or, alternatively, by performing a fractional-order integration of an input signal as a kind of nonlinear filter. Besides the power spectral density, various types of other nonlinear scaling characteristics have been suggested for estimating \(H\), including rescaled-range and detrended fluctuation analysis as two widely employed examples. A systematic inter-comparison between the three aforementioned techniques can be found in Witt and Malamud (2013).

The observation of long-range dependence in stochastic processes is related to a statistical self-similarity property of the time series graph, which is characterized by another scaling exponent measuring the roughness of this object in two-dimensional space as the scale of resolution is successively varied. Estimators of this scaling exponent quantify a fractal dimension \(D\) from a univariate stochastic process perspective, since their definition relies on fundamental concepts from fractal geometry. In the case of self-similar time series and the absence of heavy-tailed probability distribution functions, one finds another straightforward relationship between fractal dimension and Hurst exponent as \(D + H = 2\). There exist various approaches for estimating \(D\) from a univariate time series, including box-counting approaches, the Higuchi fractal dimension (Higuchi 1988), and Katz fractal dimension (Katz 1988), to mention only a few common examples. It should be noted, however, that the concept of a fractal dimension of the time series graphs, although rooted in fractal geometry, is intimately related with the linear time series property of power spectral density, and hence may not necessarily provide further insights into the studied signal beyond the latter. In essence, estimates of the Hurst exponent and the fractal dimension of the time series graph can be used interchangeably as long as there are no further complications like heavy-tailed probability distributions as commonly associated to intermittency. By contrast, in case of intermittent signals often encountered in space physics, both concepts actually provide complementary information.

2.3 Phase Space Based Methods

The aforementioned concepts of univariate time series analysis consider an observational time series as a unique source of information whose statistical properties exhaustively characterize the system under study, which however will typically be characterized by far more degrees of freedom than just one variable. In order to account for this fact, the concept of attractor reconstruction by embedding has become a core approach in dynamical system theory. Rooted in deep mathematical arguments, embedding theorems imply that under relatively general conditions, the action of unobserved variables of deterministic dynamical systems can be qualitatively reconstructed by deriving independent coordinates from a univariate time series, which are most commonly provided in terms of so-called delay coordinates, i.e. exact time-shifted replications of the original data sequence (Takens 1981). Accordingly, an \(m\)-dimensional state vector \(X\) can be reconstructed from scalar time series \(x ( t )\) as \(X ( t ) =[x ( t ),x ( t-\eta ),\dots ,x ( t- ( m-1 ) \eta ) ]\), where \(\eta \) is the backward time-lag that can, for example, be estimated from the first minimum of the mutual information (Fraser and Swinney 1986). In general, this time shift (embedding delay) and the number of shifts (embedding dimension \(m\)) should be chosen such that the individual coordinates are sufficiently independent and their entirety spans a multi-dimensional vector space in which the information contained by the original time series is completely unfolded among the different dimensions.

Utilizing the multivariate time series originated from time delay embedding, we can characterize the geometric properties of the system under study in the reconstructed phase space. There exist multiple complementary approaches to obtain useful information from this geometric perspective.

In the 1980s, in the field of dynamical systems, and in parallel also in the framework of fluid turbulence, different measures of complexity and scale-invariant features of physical systems have been introduced. They are mainly based on the concept of scaling, recalling the definition of a fractal, i.e., a geometric object displaying self-similarity and fine structure at small scales. These measures, usually called fractal dimensions, account for how the details change with the scale we are looking for. From a mathematical point of view, fractals are described via the scaling properties of a partition function based on a coarse-grained invariant measure in the probability space. Specifically, if we have a support \(\varOmega \) and we define a positive measure \(\mu \) (usually the probability of finding a portion of the state space filled by points), the partition function \(\varGamma (\ell)\) is defined as

that, in the limit of \(\ell\rightarrow 0\) displays a scaling-law behavior as

with \(D\) being the so-called fractal or box-counting dimension and \(B_{x} (\ell)\) a box of size \(\ell\) centered at \(x\). The latter formalism be generalized to any statistical order \(q\) such that

where the \(D_{q}\) are called generalized fractal dimensions, accounting for the correlation integrals of \(q\)-tuplets of points in the state space. In detail, \(D_{0}\) is the fractal (capacity or box-counting) dimensions, \(D_{1}\) is the information dimension, while \(D_{2}\) is the correlation dimension, accounting for the minimum number of system variables required to reconstruct the full dynamics of the system in its phase space. The box dimension \(D_{0}\) informs us on the geometrical features of the phase-space, indicating the minimum number of independent directions to be taken into account for confining the phase-space in a given geometrical shape. In the case of self-similar signals it relates to the Hausdorff dimension and to the Hurst exponent, informing us on the persistence/anti-persistence nature of the system. The information dimension \(D_{1}\) is a measure of the randomness of the distribution of points in the phase-space, strictly linked to the concept of loss of information, i.e., for how long the system can be accurately predicted. The correlation dimension \(D_{2}\) instead is a measure of the minimum number of variables (i.e., degrees of freedom) needed to properly describe the system.

If all \(D_{q}\) take the same values, we call the system under study mono-fractal, otherwise multifractal. Multifractal dynamics often co-occurs with intermittency phenomena, which are rather typical in space physics (e.g. the solar wind).

Another way to exploit the geometric properties of the multidimensional trajectory in the reconstructed phase space is studying the recurrence patterns of previously visited states in time. Here, recurrence refers to the close encounter of a previous state \(x\), typically defined as two states on the trajectory having a spatial distance smaller than some predefined threshold \(\varepsilon \). This concept of recurrence defines a binary relation between pairs of state vectors that can be visualized in terms of a so-called recurrence plot (Eckmann et al. 1987), where the morphology and statistics of patterns consisting of recurrent pairs can be used for defining a variety of complexity measures that can serve as proxies for predictability, determinism or laminarity, to mention only a few (Marwan et al. 2007). The density of recurrent pairs in dependence of \(\varepsilon \) corresponds to the aforementioned correlation integral, and its characteristic scaling exponent provides an estimator for the correlation dimension \(D_{2}\). In addition, it has been shown that characterizing the geometry of recurrent state pairs in the multidimensional phase space in terms of the topological characteristics of the resulting random geometric graph (Donner et al. 2011b; Donges et al. 2012) results in additional useful measures of complexity, which have been successfully employed in past studies to differentiate between the nonlinear fluctuation characteristics of geomagnetic indices during magnetic storms and quiescent periods (Donner et al. 2018, 2019).

While previous fractal dimension estimates have been based on the concept of scaling in phase space, in recent years, based on the concept of recurrences, Lucarini et al. (2012) introduced two dynamical systems metrics. One is the instantaneous dimension \(d\), accounting for the active number of degrees of freedom, and the other is the inverse persistence \(\theta \), measuring the short-term stability of the state space trajectory. Let us assume to have a trajectory in the state space \(x(t)\) and \(x(t^{*})\) to be a reference state of the system, i.e., a given configuration of the near-Earth electromagnetic environment (as an example, the quiet magnetosphere). If we define \(g(t^{*})\) as the Euclidean distance between \(x(t)\) and the reference state \(x(t^{*})\), the probability of logarithmic returns in a sphere centered at \(t^{*}\) and of radius \(r\) is a generalized Pareto-like distribution whose parameters are directly linked with \(d\) and \(\theta \). In the context of near-Earth electromagnetic environment studies these metrics have been employed by Alberti et al. (2022) to investigate the recurrence statistics of the AL and SYM-H indices. The main result is the clear dependence of the number of active degrees of freedom on the geomagnetic activity. In particular, during substorms the number of degrees of freedom increases at high latitudes; conversely, during a geomagnetic storm low-latitude exploits a reduced number of degrees of freedom. These two opposite behaviors can be related to the fast relaxation processes occurring in the magnetotail and the solar wind driving effect.

2.4 Information-Theoretic Approaches

Usually, temporal recordings of some system observables are our only asset to study the system of interest. Such time series may be univariate in the scalar case or multivariate when we deal with more than one observable. The aim is to understand the underlying dynamics of the system by analysing such deterministic or stochastic time series and extracting information from them. To quantify their inherent information content, a wide range of information-theoretic measures have been introduced. Univariate measures usually try to characterize the complexity of the dynamics like Shannon entropy (Shannon 1948) that shares the formalism with the Boltzmann-Gibbs entropic form, Renyi entropy (Rényi 1961), nonextensive entropy (Havrda and Charvát 1967; Daróczy 1970; Cressie and Read 1984; Tsallis 1988), Fisher information, Approximate entropy, Sample entropy and Fuzzy entropy (Balasis et al. 2013). However, in multivariate time series, one can ask whether different dynamical variables evolve independently or are mutually dependent, or whether one variable can influence other variables of the system. Studying such dependencies between different variables is also an important area of research, and can be related to questions of correlation and causality.

2.4.1 Entropies and Measures of Complexity

In spite of the immense success of many nonlinear time series analysis approaches with their broad range of applications, they often suffer from the domain of their applicability and their necessary assumptions, such as equilibrium-nonequilibrium, stability-instability, linearity-nonlinearity conditions, etc. As we mentioned before, entropy as a measure of the amount of uncertainty in statistical physics, has proved itself as significant in understanding various systems. In 1948, Shannon introduced a similar statistical concept to investigate the information size of a transmitted message (Shannon 1948), called information or Shannon entropy. For a discrete random variable \(X\) with a set of values \(\varXi \), the Shannon entropy \(H ( X )\) is defined as

where \(p ( x ) = \operatorname{Pr}\{X=x\}\), \(x \in \varXi \) is the probability distribution function of \(X\). Since this information entropy is not constrained by the aforementioned particular assumptions needed to study the underlying dynamics of a system, it opened new paths of research and played a significant role in the introduction of new statistical techniques (Kullback 1997). In the late 1950s, Kolmogorov and Sinai demonstrated that using the information theory of Shannon, a nonlinear dynamical process can be characterized by an entropy measure called the Kolmogorov-Sinai entropy (KSE). This entropy is suitable for classification of dynamical systems and is related to the sum of the positive Lyapunov exponents of the system and indicates that a nonlinear dynamical system can be represented as an information source (Billingsley 1965). Thus, information-theoretic measures may help to better understand dynamical processes. Indeed, as information can be representative of any physical quantities, the language of information theory proves to be powerful in investigating different complex dynamical systems (Brillouin 2013).

Renyi entropy (Rényi 1961) is a generalization of the Shannon entropy, defined as

where \(q\) is a real parameter characterizing the weights of rare and frequent events, allowing for scaling analysis of the system. Note that the Shannon entropy is a special case of \(H_{q}\) for \(q \rightarrow 1\). For example, Von Bloh et al. (2005) investigated the long-term predictability of global mean daily temperature data using the spatial patterns of the second order Renyi entropy \(H_{2}\).

The most generalized and physically constistent nonextensive entropy follows the formulation of Havrda and Charvát (1967), Daróczy (1970) and Tsallis (1988), which may be found as nonextensive, Tsallis, \(q\)-, or kappa entropy, can be considered as a generalization of the Boltzmann-Gibbs entropy in statistical physics, and is defined as follows:

where \(k\) is a positive constant that includes the Boltzmann’s constant, and \(q\) is a real parameter that characterizes the degree of non-extensivity. For \(q \rightarrow 1\) one can recover the Boltzmann-Gibbs entropy, which is a thermodynamic analogy of the Shannon entropy. Tsallis entropy has been widely applied in various fields of research (Tsallis 2009). For example, Balasis et al. have applied nonextensive entropy to quantify dynamical complexity of magnetic storms and solar flares (Balasis et al. 2011b) and of time series of the disturbance storm time index (Balasis et al. 2008).

In 1925, Fisher introduced a measure of the amount of information that can be obtained from a set of measurements (Fisher 1925), called Fisher information. One can write the Fisher information in the discrete form as

where \(x_{n}\) is the random variable \(X\) at time \(n\), \(p ( x_{n} )\) is its probability and \(N\) is the total number of time steps. Fisher information has proved itself as a powerful method to study various non-stationary and nonlinear time series (Martin et al. 1999). For example, it has been used to detect dynamical complexity changes associated with geomagnetic jerks (Balasis et al. 2016).

To compare deterministic and stochastic systems, Pincus introduced a new statistic for experimental time series, called approximate entropy, which is a parameter that measures correlation or persistence, i.e., low values of approximate entropy indicates that the system is very persistent, repetitive and predictive, while high values represent high randomness (Pincus 1991). Sample entropy is a modification of the approximate entropy, proposed in Richman and Moorman (2000). Both approximate and sample entropy have been used in various fields. For example, they have been applied in Balasis et al. (2013) to extract the complexity dissimilarity between different states of the magnetosphere.

Fuzzy entropy is a non-probabilistic concept that quantifies unpredictability of a time series. Fuzzy entropy is a measure of fuzzy information of a fuzzy system which is quite different from the Shannon entropy which is based on probability. Indeed, the uncertainty in fuzzy entropy arises from fuzziness, in contrast to the Shannon entropy where the uncertainty comes from the randomness (Al-Sharhan et al. 2001). Fuzzy entropy can be written as

where \(\mu _{i}\) is the \(i\)-th Fuzzy membership function. Similar to approximate and sample entropies, Balasis et al. also successfully applied Fuzzy entropy to extract the complexity dissimilarity among different magnetosphere states (Chen et al. 2007, 2009; Balasis et al. 2013).

If applied to simple state discretizations of a univariate time series, the aforementioned entropy concepts characterize merely higher-order distributional properties. This changes distinctively if state definitions are taken into account that explicitly include some type of dynamical perspective, e.g. by studying blocks of discretized states (Ebeling and Nicolis 1992) or their ordinalpatterns (Bandt and Pompe 2002). From the scaling of entropic characteristics with increasingpattern length, it is possible to distinguish complex deterministic from stochastic dynamics. Moreover, there exists a vast range of statistical complexity measures that can be defined using entropy concepts. Combining entropy and associated complexity characteristics in a two-dimensional plain (e.g. the complexity-entropy causality plain or the Shannon-Fisher plain) provides an effective means to distinguish chaos from stochastic motion (Rosso et al. 2007, Ribeiro et al. 2017).

2.4.2 Information Theoretic Measures of Statistical Association

The classical cross-correlation function provides a standard way to measure the similarity between the time series of two variables \(x(t)\) and \(y(t)\). We first define the time series \(\delta x ( t ) =x ( t ) - \overline{x}\) as the deviation from the time average (indicated by the horizontal bar; similarly for \(y(t)\)). Also the standard deviation of \(x(t)\) is given by \(\sigma _{X} = \sqrt{\overline{(\delta x)^{2}}}\). Then the correlation function is

Note that the correlation is a function of the lag \(\tau \) of time series \(y(t)\) in reference to time series \(x(t)\). While this fundamental measure is restricted to linear dependencies between the two variables, several information-theoretic measures have been developed to provide thorough generalizations, including mutual information, conditional mutual information and transfer entropy.

The average amount of common information, contained in two random variables \(X\) and \(Y\), is quantified by the mutual information \(I ( X;Y )\), defined as

where \(H ( X,Y ) =- \sum_{x \in \varXi} \sum_{y \in \Upsilon} \log p ( x,y ) \log p ( x,y )\) is the joint entropy and \(p ( x,y )\) is the joint probability density function of \(X\) and \(Y\). Thus, by substituting the definitions of \(H ( X )\) and \(H ( X,Y )\) in \(I ( X;Y )\), one gets

which is an average over \(\log \frac{p ( x,y )}{p ( x ) p ( y )}\). This indicates that if two variables \(X\) and \(Y\) are independent, i.e., \(p ( x,y ) =p ( x ) p ( y )\) then the mutual information \(I ( X;Y )\) tends to zero. In other words, if \(X\) and \(Y\) are dependent, then \(I ( X;Y ) >0\). In fact, \(I ( X;Y )\) can be considered as a generalized measure of (cross) correlation between two variables \(X\) and \(Y\) since, unlike the common correlation measures that are only able to detect linear dependency, mutual information is capable of capturing the general dependency among variables, including non-linear statistical associations. Note that \(I ( X;Y )\) is a symmetric measure under the exchange of \(X\) and \(Y\) and thus cannot be used as an appropriate causality measure straight away.

Paluš (1996) introduced an information theoretic measure for classification of complex time series based on auto-mutual information, characterizing the decay of \(I [ x ( t );x ( t+\tau ) ]\), called coarse-grained entropy rates. This measure quantifies the mutual information between time series \(x\) at time \(t\) and its time-lagged version, i.e., \(x\) at time \(t+\tau \) for a time lag \(\tau >0\). This measure can be considered as a relative measure of regularity and predictability and also is related to Kolmogorov-Sinai entropy for dynamical systems processes. For instance, it has been used to classify some physiological signals like electroencephalography (EEG) and tremor (Paluš 1996).

By investigating the entropy rate of a Gaussian process, Paluš (1997) developed a measure capable of distinguishing and characterizing different states of chaotic dynamical systems, called Gaussian process entropy rate. This is indeed a power-spectrum based entropy-like functional and is related to the Kolmogorov-Sinai entropy of a dynamical system. Such an entropy rate has been applied in meteorological time series to extract the system complexity (Paluš et al. 2011), for example.

2.4.3 Causality Among Time Series

A popular information-theoretic functional used for inferring causality under appropriate assumptions is the conditional mutual information (CMI) \(I ( X;Y \vee Z )\) of the variables \(X\) and \(Y\) given the variable \(Z\), defined as

If \(Z\) is independent of \(X\) and \(Y\), then \(I ( X;Y \vee Z ) =I ( X;Y )\), demonstrating that \(I ( X;Y \vee Z )\) extracts the net shared information between \(X\) and \(Y\) beyond the information of another variable \(Z\). In order to extract the possible time-delayed relationships among different variables, one should work with time delayed versions of the abovementioned measures. For example, if there is a time delay \(\tau \) between the two variables \(X\) and \(Y\), one can define a time-delayed mutual information as \(I [ X ( t );Y ( t+\tau ) ]\), which measures the average amount of information contained in the process \(X\) about the \(\tau \)-future of the process \(Y\). However, there is also information about the \(\tau \)-future of \(Y\) contained in \(Y\) itself, if \(X\) and \(Y\) are not independent. In this respect, the net information about the \(\tau \)-future of the process \(Y\) which is contained in the process \(X\) can be obtained by the conditional mutual information \(I [ X ( t );Y ( t+\tau ) \vee Y ( t ) ]\) (Paluš et al. 2001). This measure can extract the coupling direction in bivariate dynamical systems and indeed is a nonlinear generalization of the Granger causality (Granger 1969). Schreiber (2000) introduced a measure using the idea of finite-order Markov processes known as the transfer entropy, which is an equivalent expression for the time-delayed conditional mutual information defined above.

Note that in empirical experiments usually only one possible dimension of the phase space is observed for each system. In such situation, by drawing upon phase space reconstruction by means of time delay embedding, the time-delayed CMI defined above can be reformulated as

where \(\eta \) and \(\rho \) are time-lags used for the embedding of variables \(X ( t )\) and \(Y ( t )\), respectively. Note that here only the information about a single component of \(y ( t+\tau )\) in the \(\tau \)-future of the system \(Y\) is used for simplicity. Further extensive numerical analysis (Paluš and Vejmelka 2007) suggest that often a CMI in the form

is sufficient to extract the direction of coupling among \(X ( t )\) and \(Y ( t )\), i.e., the dimensionality of the condition must contain full information about the state of \(Y ( t )\), while single components \(x ( t )\) and \(y ( t+\tau )\) are able to provide information about the coupling direction from \(X\) to \(Y\), denoted as \(X \rightarrow Y\). Simply, one can obtain the causal link \(Y \rightarrow X\) as

However, Paluš (2014) criticized that, in general, the prediction horizon \(\tau \) in such equations cannot well demonstrate a time-delayed coupling. Wibral et al. (2013) proposed a solution by a simple reformulation as

Under certain assumptions (Runge et al. 2019a) these measures can detect the presence of causal relationships. In Runge et al. (2019b) a systematic causal discovery algorithm for time series has been presented that can be used together with conditional mutual information. This algorithm was generalized in Runge (2020) to also detect instantaneous causal relations, and in Gerhardus and Runge (2020) to also account for hidden common causes. These methods have great potential for further studies of causal relations among geomagnetic processes.

As an example, the conditional mutual information has been applied to investigate the causal information transfer between solar wind parameters and geomagnetic indices (Manshour et al. 2021). Specifically, in order to find the causality directions as well as the presence of any information transfer delay between the solar wind and the geomagnetic indices, the conditional mutual information was estimated between the vertical component of the interplanetary magnetic field \(B_{z}\) as a solar wind parameter and two well-known geomagnetic indices representing the auroral electrojet (AE), a substorm index, and the SYM-H, a storm index, using \(\rho =5\) samples (25 min) and \(n=3\) as the embedding parameters. In order to compute the statistical significance of those calculations, the circular time-shifted surrogates test was applied by comparing the empirical results with the average values of a set of 100 different realizations of the surrogates (Manshour et al. 2021).

Figure 1(a) demonstrates that a strong causal link exists from \(B_{z}\) to AE and also the information transfer takes two sample time steps (10 min). However, Fig. 1(b) indicates that there is no causal relationship from AE to \(B_{z}\). Similarly, we plotted CMI for the time series of \(B_{z}\) and SYM-H in Fig. 1(c) and (d). Figure 1(c) indicates that a causal relationship also exists from \(B_{z}\) to SYM-H; and the information transfer takes six sample time steps (30 min). Also, there is no causality in the reverse direction, as represented in Fig. 1(d). Our findings confirm that the interplanetary magnetic field component \(B_{z}\) drives both geomagnetic storms and substorms with different delays. The response time for the magnetic storms is longer than the time delay among the solar wind energy input and the energy release in the magnetotail during a substorm (Maggiolo et al. 2017) since it takes a considerably long time to inject particles into the ring current region (Daglis et al. 1999).

(Fig. 3 from Manshour et al. 2021) The coupling directions of (a) \(Bz\rightarrow AE\), (b) \(AE\rightarrow Bz\), (c) \(Bz\rightarrow SYM-H\), (d) \(SYM-H\rightarrow Bz\), (e) \(AE\rightarrow SYM-H\), and (f) \(SYM-H\rightarrow AE\). (g) and (h) are the coupling directions similar to (e) and (f), by taking \(B_{z}\) as the common driver. Also (i) and (j) are the coupling directions similar to (c) and (d), by taking AE as the common driver. The red lines and error bars present mean and ±2 standard deviations of CMI for a set of 100 circular time-shifted surrogates

The presence of any possible causal relationship between substorms and storms has been an open and challenging debate in space weather studies. Here, to analyze the presence of information flow between the geomagnetic indices, we further plotted in Fig. 1(e) and (f) the CMI of AE and SYM-H. Figure 1(e) shows that there is a strong flow from AE to SYM-H; which means that geomagnetic storms are driven by substorms without any delay. No information flow is detected from storms to substorms, as can be seen in Fig. 1(f). This result is indeed in line with some previous studies (Stumpo et al. 2020; Ganushkina et al. 2005). However, we demonstrate that the observed causality in Fig. 1(e) is not a direct causal link, and it indeed arises according to the presence of the common driver \(B_{z}\). To show this, we consider the effects of this common driver in CMI calculations. We can simply include \(B_{z}\) as the third variable into the condition, as

Figure 1(g) and (h) represent this CMI, and interestingly we discover that there is no information flow between AE and SYM-H when eliminating the role of the common driver \(B_{z}\). Furthermore, we also check the possible role of AE on the observed information flow among \(B_{z}\) and SYM-H, by interchanging the variables of \(B_{z}\) and AE. Figure 1(i) and (j) asserts that the information flow of \(B_{z}\) into SYM-H is independent of the AE index. Briefly, our results suggest that the observed information flow from geomagnetic substorms into storms is induced by the common solar wind driver \(B_{z}\) and in fact, there is no causal relationship between substorms and storms, which is in agreement with some previous studies (Gonzalez et al. 1994; Kamide et al. 1998; Runge et al. 2018). Runge et al. (2018) identified \(B_{z}\) and other confounders of AE and SYM-H by applying a systematic information-theoretic causal discovery algorithm. However, it should be noted here that in-situ observations have shown that the contribution of ion injections to the ring current energy gain is substantial, despite the fact that our results for the specific time scales considered and datasets examined do not favor the role of substorms in the enhancement of the storm-time ring current through accumulative ion injections during consecutive substorms (see also the references in Runge et al. 2018). Moreover, in contrast to our results, substorm bursty-bulk flows (BBFs) accompanied by strong convection may penetrate the inner magnetosphere and contribute to the ring current.

2.5 Time Series Decomposition

Physical systems and natural processes are usually characterized by a chaotic and unpredictable behaviour, typically displaying multiscale interacting components. This is manifested in the existence of some scaling-law behaviour, reflecting their (multi)fractal nature. Moreover, they are also characterized by bifurcations between different states, strange attractors, and invariant manifolds, reflecting the different dynamical regimes of the system. Thus, a first step towards characterizing these features relies on decomposition methods. These methods are usually classified into adaptive and non-adaptive/fixed-basis concepts: the latter presents a strong mathematical background, while the former detects structures embedded with no a priori assumptions.

2.5.1 Wavelet Analysis

Fourier transform (FT), a cornerstone of time series analysis, is a commonly used technique to convert a signal in time domain into a frequency domain representation by decomposing it in terms of sinusoidal components (basis functions) spanning the whole spectrum. FT captures only global frequency information, averaged over the entire time period of observations. Thus, if frequency components of a time series vary over time, FT cannot identify those localized features of the series. In order to capture the frequency information localized in time, one can find a sequence of FT of a windowed signal, known as short time Fourier transform (STFT). However, it still suffers from some limitations such as using a window of constant shape and size as well as non-localized sinusoidal basis functions, so that it cannot capture the characteristics of sharp events or those with different durations. On the other hand, due to the Heisenberg’s uncertainty principle, STFT cannot generate features with both time and frequency instantaneous localization.

Wavelet Transform (WT) tries to overcome the STFT shortcomings and is a powerful technique for analyzing localized variations of power within a time series (Addison 2017). Similar to STFT, WT is a method to convert a one-dimensional time domain signal into a two-dimensional time-frequency domain signal, but in contrast to STFT, the wavelet algorithm uses basis functions with transient nature, called mother wavelets, which are not restricted to a single family of functions (like sinusoidal functions in STFT) and have a wave-like nature that is localized in time, capable of locating the occurrence of a sharp event in the time domain. The wavelet functions can be obtained as a linear combination of scaled and shifted mother wavelets.

In general, a wavelet transform can be either continuous or discrete. At first, we briefly describe the continuous wavelet transform (CWT) algorithm. The function \(\Psi \) can be a mother wavelet if it satisfies two conditions. It must have zero-mean

and finite energy (or, equivalently, must be square integrable), i.e.,

As we mentioned above, a wavelet is constructed from a scaled and shifted mother wavelet \(\Psi \) as

where \(\theta \) and \(s\) are shift and scale parameters, respectively. In fact, \(\theta \) indicates the location of the wavelet in time and if \(s>1\) the wavelet is stretched along the time axis, whereas if \(0< s<1\), the wavelet is contracted. The CWT of a time series \(x(t)\) in a given location and scale can be obtained by projecting \(x(t)\) onto the corresponding wavelet as:

where (∗) indicates the complex conjugate. In fact, CWT provides the local similarity (or correlation) of a particular section of a signal and the corresponding wavelet, thus by changing the shift and scale parameters one can construct a two-dimensional picture representing the amplitude of any features versus the scale (or frequency) as well as the time resolution of this amplitude. If the mother wavelet also satisfies the admissibility condition of

where \(\tilde{\Psi} (\omega )\) denotes the Fourier transform of \(\Psi (t)\), one can reconstruct the original time series by using the inverse wavelet transform as

Another form of wavelet analysis is the discrete wavelet transform (DWT), in which the scale and shift parameters are discrete. For example, in the dyadic DWT the scale takes values of the form \(s= 2^{k}\) where \(k\) is an integer and at a given scale \(s\), the shift parameter takes values of the form \(\mu = 2^{k} l\) where \(l\) is also an integer number. Accordingly, discrete wavelets are defined as

The DWT of a signal \(x(t)\) is

The original signal \(x ( t )\) can also be reconstructed using the inverse wavelet transform as

if the discrete wavelets form an orthogonal basis, i.e.,

where \(\delta _{lk}\) denotes the Kronecker’s delta function. Due to the discrete nature of the parameters in the DWT, localization of transient features or characterization of oscillatory behaviours is much harder than in the CWT. On the other hand, only a restricted number of admissible wavelets are available in DWT when compared with CWT. However, the algorithm of DWT is simple and computationally more efficient than CWT.

Wavelet transform has widely been applied in science, engineering, medicine and finance (Addison 2017), and it has already proved itself as a powerful tool in geophysical research, including geospace disturbances (Katsavrias et al. 2022 and references therein), synchronization between wavelet modes of North Atlantic Oscillation index, the time series of sunspot numbers, the geomagnetic activity aa index and near surface air temperature (Paluš and Novotná 2009), the magnetic storm activity (Xu et al. 2008; Mendes et al. 2005; Wei et al. 2004), self-affine properties of geomagnetic perturbations (Zaourar et al. 2013), external source field in geomagnetic signals (Kunagu et al. 2013), seasonal variations in the Ap index (Lou et al. 2003), the effects of the interplanetary magnetic field polarities on geomagnetic indices (El-Taher and Thabet 2021), the interrelationship between solar wind coupling functions and the geomagnetic indices Dst and AL (Andriyas and Andriyas 2017), variation of relativistic electrons in the outer radiation belt (Katsavrias et al. 2021), and the causal relations between the magnetosheath pressure and the waves observed in the magnetosphere (Archer et al. 2013).

2.5.2 Singular Spectrum Analysis

Singular spectrum analysis (SSA) is a nonparametric and data-adaptive method that addresses a variety of problems in time series analysis including forecasting, imputation of missing values, and reducing the dimensionality by decomposing a time series into a small number of interpretable components such as trends, oscillatory behaviours and noise (Elsner and Tsonis 1996; Golyandina et al. 2001). SSA contains elements of signal processing, linear algebra, nonlinear dynamical systems, ordinary differential equations, and functional analysis (Golyandina et al. 2001). As a powerful tool for time series analysis, it has frequently been applied in a broad variety of fields such as oceanology (Colebrook 1978), meteorology and climatology (Ghil and Vautard 1991; Keppenne and Ghil 1992; Yiou et al. 1994; Allen and Smith 1994; Ghil et al. 2002), nonlinear physics and signal processing (Broomhead and King 1986), economy (Hassani and Zhigljavsky 2009), and social sciences (Golyandina et al. 2001) among others.

The fundamental step in SSA is an orthogonal decomposition of a covariance matrix of the studied time series into its spectrum of eigenvalues as well as its orthogonal eigenvectors. One obtains linearly independent individual data components (modes) by projecting original data onto these orthogonal eigenvectors. In fact, the basic univariate SSA steps can be described as follows: Let a univariate time series \(y_{i}\), \(i = 1,\ldots, N_{0}\), be a realization of a stationary and ergodic stochastic process \(\{ Y_{i} \}\). We map the original time series \(y_{i}\) into a sequence of \(d\)-dimensional lagged vectors of \(\boldsymbol{x}_{i}\) with components \(x_{i}^{k} = y_{i+k-1}\) where \(k=1,\dots ,d\) and \(i=1,\ldots, N= N_{0} -d+1\). The sequence of the vectors \(\boldsymbol{x}_{i}\) is usually referred to as the \(N\times d\) trajectory matrix \(\boldsymbol{X} =\{ x_{i}^{k} \}\), which contains the complete record of patterns that have occurred within a window of size \(d\) (embedding dimension). Suppose that the original time series \(y_{i}\) results from a linear combination of \(m< d\) different dynamical modes. Then, ideally, the trajectory matrix \(\boldsymbol{X}\) has rank \(m\), and can be transformed into a matrix with only \(m\) nontrivial linearly independent components. Instead of the \(N\times d\) matrix \(\boldsymbol{X}\), it is more appropriate to decompose the symmetric \(d\times d\) lagged-covariance matrix \(\boldsymbol{C} = \boldsymbol{X}^{T} \boldsymbol{X}\), since \(\boldsymbol{X}\) and \(\boldsymbol{C}\) have the same rank. If the components \(\{ x_{i}^{k} \}\) have zero mean, then the elements of the covariance matrix \(\boldsymbol{C}\) can be written as

where \(1/N\) is a normalization factor. The symmetric matrix \(\boldsymbol{C}\) can be decomposed as

where \(\boldsymbol{V} =\{ v_{ij} \}\) is an \(d\times d\) orthogonal matrix and \(\boldsymbol{\Sigma} \) is a diagonal matrix of elements \(\sigma _{1}, \sigma _{2},\ldots, \sigma _{d}\). The elements \(\sigma _{k}\), \(k=1,\dots ,d\) are the non-negative eigenvalues of \(\boldsymbol{C}\) by convention given in descending order \(\sigma _{1} \geq \sigma _{2} \geq \cdots \geq \sigma _{d}\). The square roots of the eigenvalues, \(\sigma _{k}^{1/2}\), and the set of \(\{ \sigma _{k}^{1/2} \}\) are called singular values and singular spectrum, respectively, and give SSA its name. Simply, one can calculate the modes as \(\xi _{i}^{k} = \sum_{l=1}^{d} v_{lk} x_{i}^{l}\). If the rank of \(\boldsymbol{C}\) is \(m< d\), then \(\sigma _{1} \geq \cdots\ \geq \sigma _{m} > \sigma _{m+1} =\cdots = \sigma _{d} =0\). In the presence of noise, however, all eigenvalues are positive and we have \(\sigma _{1} \geq \cdots \geq \sigma _{m} \gg \sigma _{m+1} \geq \cdots \geq \sigma _{d} >0\) (Broomhead and King 1986). In fact, if we plot the eigenvalues, \(\sigma _{k}\), one can often observe an initial steep decline for \(k=1,\ldots, m\) and a nearly flat line for \(k=m+1,\ldots, d\), thus the corresponding modes, \(\xi _{i}^{k}\), are considered as the signal part and the noise part, for the former and the latter case, respectively. In this respect, the signal modes can be used to reconstruct the denoised signal \(\tilde{x}_{i}^{k}\) as

Analogously, the original time series \(x_{i}^{k}\) can be reconstructed back from the modes as

The modes \(\xi _{i}^{k}\) can also be considered as time-dependent coefficients and the orthogonal vectors \(\{ v_{l}^{k} \}\) as basis functions, also known as the empirical orthogonal functions.

As mentioned above, distinguishing the signal components from noise is based on finding a threshold to a noise floor. This approach might be problematic if the noise present in the data is not white but colored or the signal-to-noise ratio is not large enough. For example, in the presence of red noise of \(1/f\) type power spectra, which is ubiquitous in geophysical processes, one cannot reliably detect a nontrivial signal only by comparing the eigenvalues, since the eigenvalues related to the slow modes are much larger than those of the fast modes. Hence, the eigenvalues of the slow modes might incorrectly be interpreted as a nontrivial signal, or, on the other hand, a nontrivial signal embedded in a red noise might be neglected if its eigenvalues are smaller than the slow-mode eigenvalues of the background noise.

To correctly distinguish a signal from red noise, a statistical approach called Monte Carlo SSA (MCSSA) has been proposed that utilizes the Monte Carlo simulation techniques to test the eigenvalues of the SSA modes against a red noise null-hypothesis (Allen and Smith 1996). Surrogate data complying with this null-hypothesis are constructed as realizations of an autoregressive process of order 1, reflecting the \(1/f\) character of the analyzed data spectrum. For each realization, a covariance matrix \(\boldsymbol{C}_{r}\) is computed and then projected onto the eigenvector basis of the original data. The statistical distribution of the diagonal elements of the resulting matrix, obtained from the ensemble of Monte Carlo simulations, gives confidence intervals outside which a time series mode can be considered significantly different from a generic red-noise process. An extension of MCSSA has been proposed by Paluš and Novotna (2004) to evaluate and test regularity of dynamics of the SSAmodes against the colored noise null hypothesis, in addition to the test based on eigenvalues. This enhanced MCSSA has successfully been applied in detection of oscillatory modes in records of the monthly North Atlantic Oscillation index, the time series of sunspot numbers, the geomagnetic activity aa index and near surface air temperature from several mid-latitude European locations (Paluš and Novotná 2008, 2009).

The SSA algorithm has also been extended in order to analyse multivariate time series in the presence of noisy and/or missing data (Allen and Robertson 1996). Similar to the univariate SSA, decomposition and reconstruction are two main ingredients in multi-channel singular spectrum analysis (mSSA). Accordingly, the multivariate trajectory matrix \(\boldsymbol{D}\) is constructed as \(\boldsymbol{D} =( \boldsymbol{X}^{1},\dots , \boldsymbol{X}^{L} )\) where \(L\) is the number of time series and \(\boldsymbol{X}^{\boldsymbol{i}}\) represents the trajectory matrix of the \(i\)-th time series. Then, the grand lagged covariance matrix is obtained as \(\boldsymbol{C}^{{G}} = \boldsymbol{D}^{T} \boldsymbol{D}\). After finding the corresponding eigenvalues and eigenvectors of the decomposed \(\boldsymbol{C}^{G}\), one can compute the reconstructed components of the original time series similar to the basic SSA algorithm described above. mSSA is an effective statistical data analysis method and has been applied to various fields including oceanography, geoscience, meteorology, among others (Vautard and Ghil 1989; Wang et al. 2016; Shen et al. 2017; Zotov et al. 2016; Zhou et al. 2018).

2.5.3 Empirical Mode Decomposition

Recently, a wide range of adaptive methods have been proposed (Chatfield 2016) and also applied in the field of geospace research (e.g., Balasis and Egbert 2006; Alberti et al. 2017, and references therein). Among them, particular attention has been paid to the Empirical Mode Decomposition (EMD) (Huang et al. 1998; Huang and Wu 2008). EMD is an adaptive method based on an iterative process known as sifting that exploits the local properties of signals to derive the decomposition basis. Given a signal \(y(t)\), the sifting consists of the following steps:

-

1.

derive a zero-mean signal \(r(t) = y(t) - \langle y(t)\rangle \), with \(\langle \dots \rangle \) denoting the time average;

-

2.

derive the local maxima and minima of \(r(t)\) and interpolate them separately via cubic splines to derive upper \(u(t)\) and lower \(l(t)\) envelopes;

-

3.

evaluate the mean envelope \(m(t) = (u(t) +l(t))/2\) and determine the detail \(h(t) = r(t) - m(t)\);

-

a.

if the numbers of extrema and zero crossings are equal or differ at most by one and if the mean envelope of \(h(t)\) has a zero mean, then \(h(t)\) is assigned to be an Intrinsic Mode Function (IMFu) or empirical mode;

-

b.

otherwise steps 1 to 3 are iterated n times until a candidate detail \(h_{n}(t)\) is assigned to be an IMFu.

-

a.

Step 1. to 3. are repeated on the residual \(r_{h}(t) = r(t) - h_{n}(t)\) until no more IMFus can be extracted. In the end, the signal \(y(t)\) can be written as the sum of all empirical modes \(\{c_{k}(t)\}\) and the final residue \(r(t)\), e.g., a non-oscillating function, as

where \(N_{{k}}\) is the number of detected IMFus. The set of \(\{c_{k}(t)\}\) is the basis of a Hilbert space, satisfying properties of completeness, convergence, and local orthogonality (Huang et al. 1998; Flandrin et al. 2004).

An example of the intrinsic mode functions obtained via the EMD procedure is shown in Fig. 2 for the geomagnetic storm occurred on 22 August 2005. As expected, the amplitudes of empirical modes show an increase in correspondence of the three geomagnetic storms. However, the empirical modes associated with the shortest timescales, i.e., \(C_{1}(t) - C_{8}(t)\), show irregular amplitude enhancements also before/after the storms (see, e.g., the time period from 10 to 15 August 2005), suggesting that at these timescales the dynamics of SYM-H may be not directly controlled by the external driving, i.e., not linearly correlated to interplanetary changes. Indeed, according to Alberti et al. (2017) the fluctuations at these timescales do not show a one-to-one coupling with solar wind and interplanetary parameters, indicating the occurrence of a nonlinear response at these timescales (see, e.g., Tsurutani et al. 1990).

(Fig. 4 from Alberti et al. 2018) The empirical modes (\(C_{{k}}(t)\)) and the residue (\(\operatorname{res}(t)\)) extracted from the SYM-H time series for the time period from 10 August 2005 to 10 September 2005. The empirical modes are ordered by increasing characteristic timescale (from top to bottom)

Once the decomposition basis is obtained, the local amplitude-frequency modulation can be derived via the Hilbert Transform (HT) defined as

where \(P\) denotes the Cauchy principal value. By defining a complex signal as

with

where \(a_{k} ( t )\) and \(\phi _{k} ( t )\) are the instantaneous amplitude and phase of the \(k\)-th empirical mode, respectively, a time-frequency-amplitude representation can be derived, usually known as Hilbert-Huang spectrum, by contouring the squared instantaneous amplitudes in a time-frequency plane (Huang et al. 1998). By integrating over time, the so-called Hilbert marginal spectrum can be derived, strictly related to the Fourier power spectral density, accounting for the second-order statistical moment frequency distribution (Consolini et al. 2017). It should be noted though that estimating the exponents that characterize scaling is challenging in real-world data, and requires a methodology that goes beyond Fourier approaches (Kiyani et al. 2009, 2013).

An example of the Hilbert marginal spectrum obtained from the analysis of solar wind turbulence and small-scale dynamics is shown in Fig. 3 (Consolini et al. 2017). Two different spectral regimes, characterized by different spectral exponents, can be identified above and below the spectral break \(f_{b} \sim 0.3~\text{Hz}\). This frequency break is compatible with the ion cyclotron frequency \(f_{\varOmega}\) considering the effect of the Doppler shift. Furthermore, below the frequency break all the spectra tend to the typical magnetohydrodynamic (MHD) Alfvénic turbulence spectrum (\(S(f) \sim f^{- 3/2}\)), while above \(f_{b}\), i.e., in the kinetic domain, the spectra are approximately \({\sim} f^{- 8/3}\). This value seems to be compatible with several different kinetic turbulent regimes (e.g., compressible Hall-MHD turbulence, EMHD-turbulence, kinetic Alfvén wave turbulence), which predict a spectral slope near \({\sim}{-}7/3\).

(Fig. 6 from Consolini et al. 2017) The marginal Hilbert energy spectra in the parallel and perpendicular directions to the mean field

As a conclusion of this part, we would like to summarize some of the advantages and limitations of the EMD. It is particularly suitable to reduce a priori assumptions on the functional form of the basis of the decomposition, carrying out local features from time series that cannot usually be obtained by using fixed eigenfunctions. However, as for each data analysis method some outstanding problems need to be listed.

-

1.

End/Boundary effects occur since the sifting is based on the local extremes of the time series. End points of the latter are clearly classified as local extrema. This can produce misleading empirical modes, propagating into the decomposition process through the sifting steps, since they are not the extreme values of the time series. To avoid this problem mirror and/or data extending methods (Huang and Wu 2008) have been proposed for a better spline fit at the ends.

-

2.

Mode mixing can take place if a similar scale is present in different empirical modes. This is related to the signal intermittency, aliasing the time-frequency distribution and devoiding empirical modes of physical meaning (Huang et al. 1998, 1999). One of the ways to avoid this problem is a noise-assisted sifting, known as Ensemble EMD. It consists of adding an ensemble of white noise series to the original data and use the EMD to decompose each time series. Then, the true empirical mode is the ensemble mean of the corresponding intrinsic mode functions of the decomposition.

2.5.4 Multiscale Fractal Measures of State Space Trajectories

One of the most intensively studied contemporary problems in nonlinear sciences is the investigation of the multiscale dynamical properties of time series. Recently, the previously described EMD has been used in combination with state space indicators to investigate scale-dependent properties of physical systems. As shown in Alberti et al. (2020a) multiscale measures can be derived by looking at the generalized fractal dimensions at different scales retrieved via the EMD algorithm. Let us assume that a signal \(s ( t )\) could manifest a multiscale behaviour

where \(\langle s\rangle \) is a steady-state average value and \(\delta s_{\tau} ( t )\) a fluctuation at scale \(\tau \). For each \(\sum_{\tau} \delta s_{\tau} ( t )\) a natural measure \(d \mu _{\tau} \) can be introduced in a way similar to the concept of scale-local Rényi dimensions (Grassberger 1985) as follows. We first consider a partition function

with \(B_{s,\tau} ( l )\) being the hypercube of size \(l\) centered at the point \(s\) on the space of the \(\sum_{\tau} \delta s_{\tau} \). Thus, the multiscale generalized fractal dimensions can be introduced as

This approach, similarly to the partial dimensions proposed by Grassberger (1983), allows us to detect information on the state space topological properties by investigating the behaviour of the generalized dimensions at different scales \(\tau \). Furthermore, via the Legendre transform we can evaluate the multiscale singularities and singularity spectrum as

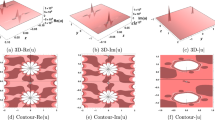

Figure 4 reports an exemplary application of this formalism to the analysis of the SYM-H geomagnetic index. It is interesting to observe the existence of a multifractal nature at all scales, although different values are found for the multiscale generalized fractal dimensions \(D_{{q, \tau}}\). Indeed, a more regular behaviour is found at long timescales, mainly related to the solar wind variability and to the nonlinear response of the magnetosphere to solar wind changes. Conversely, the short timescale dynamics, that can be related to the internal dynamics of the magnetosphere, is characterized by larger dimensions, reflecting a stochastic nature of fluctuations. Furthermore, by reconstructing the phase space we can underline two different regions: one corresponds to the quiet-time values of the SYM-H index and the other to the disturbed-time ones. By using the short-scale reconstruction of empirical modes it does not quite reproduce the phase space dynamics, suggesting that some relevant information is missed. By providing instead reconstructions of IMFs on large timescales we are able to cover those phase space regions which have not been captured by the fast component. This can be interpreted as a signature of the existence of a different origin of processes operating on short and long timescales.

(Fig. 14 from Alberti et al. 2020a) Multifractal analysis of the Dst index

2.6 Multiscale Stochastic Approaches in Geospace Research

Interpreting the geospace variability in terms of a dynamical system, whose dynamics spans a wide range of scales with a clear separation between fast and slow processes, is one of the most promising aspects for understanding and predicting its variations. In the simplest way a non-linear stochastic model, based on a generalized Langevin equation, could be feasible. This approach, extensively used in the climate framework (see, e.g., Ditlevsen 1999; Kwasniok and Lohmann 2009; Livina et al. 2010; Alberti et al. 2014), is based on a 1-dimensional system, described by a continuous system variable \(x ( t )\), whose slow dynamics is driven by a forcing term \(F ( x )\), while the fast dynamics is described in terms of a noise process \(\eta ( t )\) of amplitude \(\sigma \). The Langevin equation reads

where \(U ' ( x ) =-F ( x )\). From a dynamical system point of view, \(U ( x )\) captures all the statistical properties of the system, since it can be linked to the probability distribution function of the system variable \(x\). Indeed, in this case the Fokker-Planck equation now reads

In this case, the stationary solution for the probability distribution, \(p_{s} ( x )\), is a direct function of the state function \(U ( x )\), being

Then, once the stationary distribution function \(p_{s} ( x )\) is known, by inverting the above relation it is possible to get the state function \(U ( x )\) responsible for the slow dynamics as

The stationary solution is also an equilibrium solution (Ichimaru 1973), so that the state function \(U ( x )\) can be used to investigate the number and the nature of the available system states. Indeed, having even-order state functions (i.e., with positive curvature at both minus and plus infinity), the number of zero crossings of the first derivative of \(U ( x )\) corresponds to the number of system states, while the sign of the second derivative evaluated at the zeros of \(U ' ( x )\) characterizes their nature (positive: stable, negative: unstable) (Ditlevsen 1999; Kwasniok and Lohmann 2009; Livina et al. 2010; Alberti et al. 2014).

3 Contributions to Substorm Research

3.1 Nonlinear and System Science Approaches to Substorms

Substorms share basic characteristics with driven, dissipative systems that are far-from-equilibrium, and as such suggest points of contact with the wider field of non-equilibrium statistical physics. The magnetosphere is a driven system with capacity for mass, momentum and energy storage and release on multiple scales: (i) microscales, where an instability occurs in a background plasma that can be treated as approximately uniform, (ii) mesoscales, where the variation in the background is relevant, and (iii) macro- or system scales, there the process spans the entire magnetosphere, as in a geomagnetic substorm.

Many of the approaches and physical models that have been suggested for substorms have heritage in non-linear and complex systems modeling. We can chart the development of this strand of substorm research across three broad categories:

-

1)

Low-dimensional and in a smoothed sense deterministic

-

2)

High dimensional and intrinsically stochastic

-

3)

An emergent transition from a system dominated by processes on the micro and mesoscale, to one characterized by large scale system-spanning coherent structure

3.2 Low-Dimensional and Deterministic